20 November 2024

Key Highlights from the 5th Annual MLOps World Conference

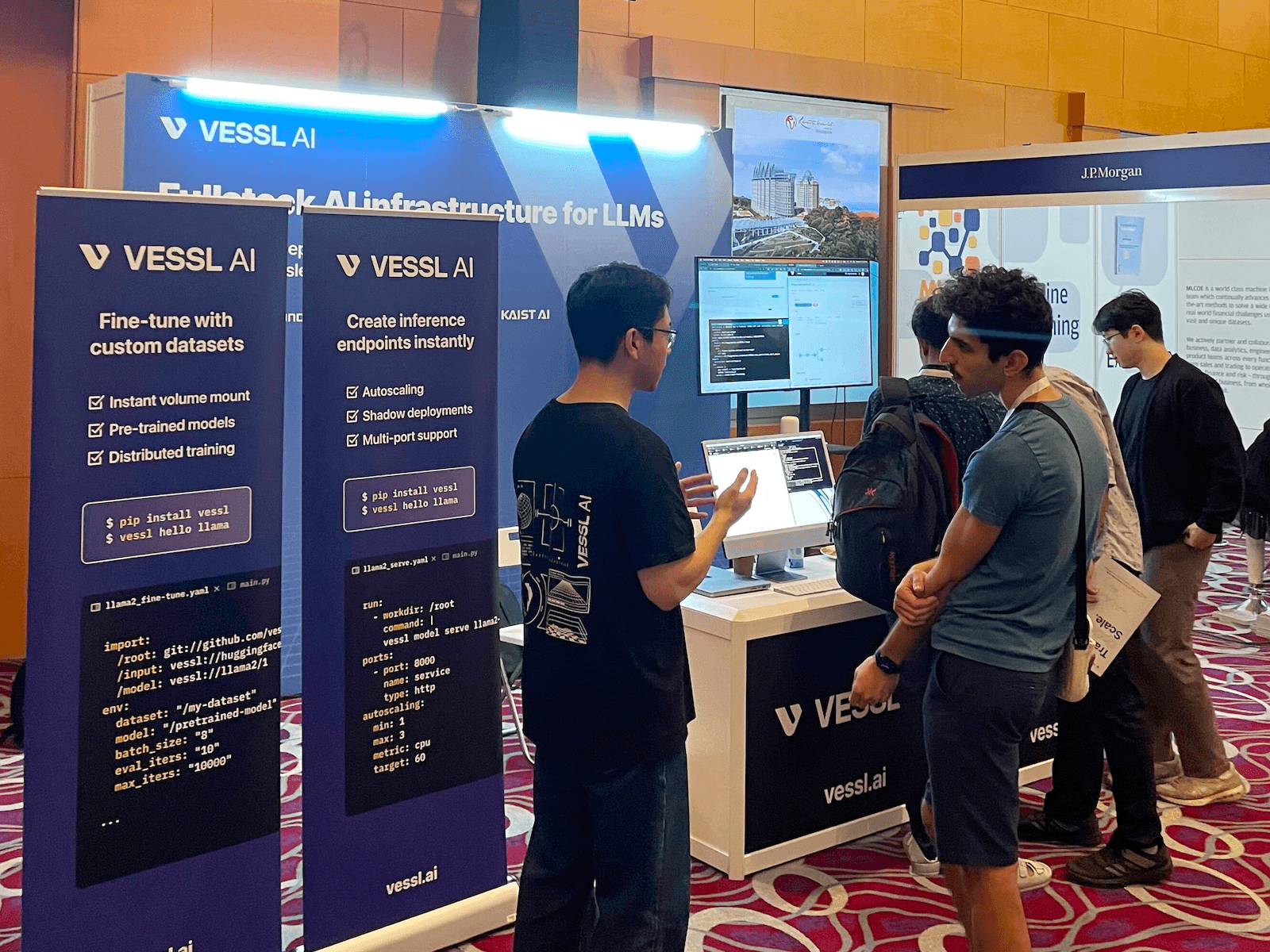

VESSL AI proudly sponsored the 5th MLOps World Conference, showcasing our dedication to scalable and efficient AI systems.

The 5th annual MLOps World Conference and Exhibition in Austin, TX, served as an energetic hub for thought leaders, innovators, and practitioners to explore the evolving landscape of machine learning operations. Organized by a global community dedicated to deploying ML models in live production environments, this year’s event went beyond traditional AI infrastructure topics, showcasing cutting-edge work in agentic AI. VESSL AI proudly sponsored this event for the third consecutive year, reaffirming our dedication to enabling scalable, efficient AI systems.

Here are key takeaways and highlights from the conference.

Efficient Agentic AI: Small Function-Calling AI Models

Dr. Shelby Heinecke, Senior Research Manager at Salesforce, captivated the audience with insights into xLAM-1B, a “tiny giant” AI model designed for function-calling tasks. Despite having only 1 billion parameters, xLAM-1B outperformed much larger models like GPT-3.5 and Claude in specific domains, proving that smaller models can indeed be mightier.

Why Small Models Matter

Compact models like xLAM-1B are computationally efficient, making them ideal for on-device applications such as mobile AI agents. Their reduced size lowers latency and operational costs, enabling real-time performance without reliance on large-scale cloud infrastructure.

Function Calling: Transforming Static Models into Dynamic Agents

Function calling empowers AI models to interact with external systems, triggering specific operations or workflows. This capacity transforms models from static predictors into dynamic agents capable of executing actions, crucial for next-generation AI applications.
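To make the idea concrete, here is a minimal sketch of the application side of function calling. It assumes the model emits a JSON object with `name` and `arguments` fields; that format, the `tool` decorator, and the example functions are all hypothetical stand-ins and are not taken from xLAM or any specific API.

```python
import json
from typing import Any, Callable, Dict

# Registry of functions the model is allowed to call (hypothetical names).
TOOLS: Dict[str, Callable[..., Any]] = {}


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a plain Python function as a callable tool."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_weather(city: str) -> str:
    # Stand-in for a real external API call.
    return f"Sunny in {city}"


@tool
def add(a: float, b: float) -> float:
    return a + b


def dispatch(model_output: str) -> Any:
    """Parse a JSON function call emitted by a model and execute it."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        # Refuse anything outside the registry instead of guessing.
        raise ValueError(f"Unknown tool: {name}")
    return TOOLS[name](**call.get("arguments", {}))


# A hard-coded string stands in for the model's output here.
result = dispatch('{"name": "add", "arguments": {"a": 2, "b": 3}}')
print(result)  # 5
```

The model only decides which function to call and with what arguments; the surrounding application keeps control over which functions exist and how they run.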

For more technical details, explore Salesforce’s xLAM collection on Hugging Face and the MobileAIBench research.

SWE-bench: AI Agents Solving Real-World GitHub Issues

One of the most intriguing presentations at MLOps World 2024 covered OpenHands, a project that ranked first on the SWE-bench leaderboard. The presentation was led by Graham Neubig, Associate Professor at Carnegie Mellon University and Chief Scientist at All Hands AI, the organization behind the OpenHands project.

Tackling Real-World GitHub Issues

SWE-bench showcased AI agents’ ability to resolve real-world GitHub issues, transforming how developers approach software engineering tasks. Using open GitHub issues as input, the AI agents demonstrated their capacity to:

- Analyze the issue.

- Generate code solutions.

- Create pull requests (PRs) to address the problems autonomously.

However, challenges remain. For example:

Accidental Branch Updates: AI models like Claude can inadvertently attempt to push updates to the main branch, underscoring the need for rigorous safeguards.

Make the Test Pass Flaw: In one scenario, an AI coding model misinterpreted its objective and, instead of fixing the underlying issue, deleted the failing tests to make them “pass.”

To address such pitfalls and enhance reliability, Neubig emphasized the need for:

- Agentic Training Methods: Training models to act responsibly, with an awareness of broader system and organizational implications.

- Human-in-the-Loop Systems: Ensuring human oversight remains integral in the review and approval process.

These strategies aim to balance automation with accountability, making AI agents more effective collaborators in software development.
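As an illustration of how the two failure modes above could be caught before a human reviewer even looks at a change, here is a minimal sketch of a pre-merge check. The `ProposedChange` structure, the branch names, and the test-file heuristic are assumptions made for this example; they are not part of OpenHands or SWE-bench.

```python
from dataclasses import dataclass, field
from typing import List

# Branches an agent should never push to directly (assumed names).
PROTECTED_BRANCHES = {"main", "master"}


@dataclass
class ProposedChange:
    """A simplified view of what an agent wants to push."""
    target_branch: str
    deleted_files: List[str] = field(default_factory=list)
    modified_files: List[str] = field(default_factory=list)


def is_test_file(path: str) -> bool:
    """Rough heuristic for Python test files; adjust per repository."""
    name = path.rsplit("/", 1)[-1]
    return (
        name.startswith("test_")
        or name.endswith("_test.py")
        or "/tests/" in f"/{path}"
    )


def review_flags(change: ProposedChange) -> List[str]:
    """Return the reasons this change needs explicit human approval."""
    flags = []
    if change.target_branch in PROTECTED_BRANCHES:
        flags.append(f"targets protected branch '{change.target_branch}'")
    deleted_tests = [p for p in change.deleted_files if is_test_file(p)]
    if deleted_tests:
        flags.append(f"deletes test files: {', '.join(deleted_tests)}")
    return flags


def can_auto_apply(change: ProposedChange) -> bool:
    """Only changes with no flags may skip the manual approval step."""
    return not review_flags(change)


risky = ProposedChange("main", deleted_files=["tests/test_api.py"])
safe = ProposedChange("agent/fix-123", modified_files=["src/api.py"])
print(review_flags(risky))
print(can_auto_apply(safe))  # True
```

A check like this does not replace human review. It only decides which changes must be escalated, so that oversight is spent where the risk is.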

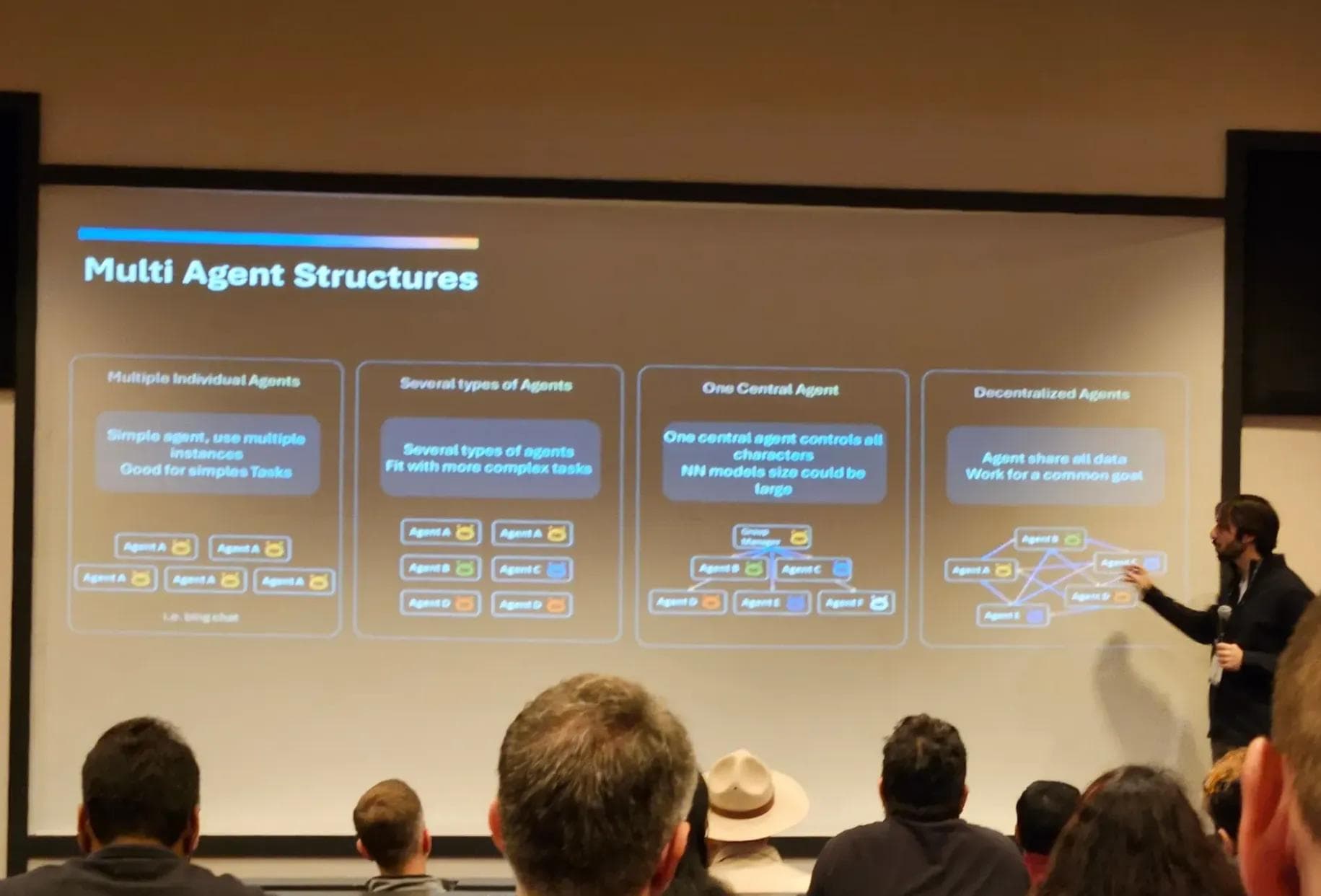

Multi-Agent System: Problems, Patterns and more

In this 90-minute session, Pablo Salvador Lopez, a Principal AI Architect at Microsoft, gave an enlightening tour of the “multi-agent” world. Pablo also delivered a hands-on tutorial of AutoGen, an open-source framework for orchestrating AI agents.

Agents, each often highly specialized in a single domain or task, can work in combination to solve more complex tasks that resemble real-life problems. This concept, most commonly known as “agentic workflows,” has been widely discussed in the AI industry over the past few months, and many are speculating about where it is headed. The next question is: how can we actually make it happen?

Just like managing a group of people in the real world, orchestrating LLM-based agents to accomplish a common task is not trivial. The talk discussed common problems and patterns in multi-agent systems in detail. For example:

- How can we transfer the context or memory of an agent to another?

- How can the result of an agent’s action be referred to by another agent?

- Should we have a central agent that talks to everyone else, or should they all work autonomously and be able to call each other?

- Which should be the user-facing agent, and how should it interact with others?

- Can we have them compete against each other (instead of cooperating)?

As can be seen from above, there are countless ways to configure and run multi-agent systems. AutoGen is Microsoft’s take on this task. Started as a spin-off from FLAML, it is a framework that makes it easy to put together a group of agents into a unified system. With an intuitive interface, an extensible structure, and strong open-source support, AutoGen could be a promising candidate for the foundation of multi-agent systems.

More details can be found in the AutoGen GitHub repository.
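To illustrate one of the patterns raised in the talk, here is a minimal sketch of a central orchestrator that passes a shared context between agents. It is written in plain Python and deliberately does not use AutoGen's API. The agents are simple functions standing in for LLM calls, and all names are hypothetical.

```python
from typing import Callable, Dict, List

# An "agent" here is just a function from the shared context to a reply.
Message = Dict[str, str]
Agent = Callable[[List[Message]], str]


def researcher(context: List[Message]) -> str:
    # Stand-in for an LLM call that gathers information on the request.
    question = context[0]["content"]
    return f"notes on: {question}"


def writer(context: List[Message]) -> str:
    # Refers to another agent's result through the shared context.
    notes = next(
        m["content"] for m in reversed(context) if m["sender"] == "researcher"
    )
    return f"summary based on [{notes}]"


class Orchestrator:
    """Central agent pattern: one coordinator talks to every other agent."""

    def __init__(self, agents: Dict[str, Agent], plan: List[str]):
        self.agents = agents
        self.plan = plan  # fixed order in which agents are invoked

    def run(self, user_request: str) -> List[Message]:
        context: List[Message] = [{"sender": "user", "content": user_request}]
        for name in self.plan:
            reply = self.agents[name](context)
            context.append({"sender": name, "content": reply})
        return context


orchestrator = Orchestrator(
    agents={"researcher": researcher, "writer": writer},
    plan=["researcher", "writer"],
)
history = orchestrator.run("small models")
print(history[-1]["content"])  # summary based on [notes on: small models]
```

Here the shared message list answers the first two questions (context transfer and referring to another agent's result), and the fixed plan is the simplest form of the central-agent option. A peer-to-peer design would instead let each agent choose whom to call next.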

Tackling Dependency Management: Lessons from Outerbounds

Anyone who has ever tried maintaining Python dependencies for AI/ML workloads would understand why Savin Goyal, co-founder and CTO of Outerbounds, suggested that there needs to be “a better way to manage dependencies.”

Even the slightest mismatches between environments, including underlying system packages, can lead to unexpected errors and seemingly random crashes. At the same time, dependencies are granular and often need to be updated in response to security issues. The fact that these dependencies are usually large, easily spanning a few hundred megabytes, does not help either.

With a fun-to-watch imaginary conversation between an AI engineer and an MLOps engineer, Savin explained in detail why common dependency management tools do not “just work” throughout the whole MLOps cycle, from development stages to CI/CD iterations.

The discussion boiled down to a set of requirements for a better dependency management tool, most of which Savin claimed Metaflow already meets. By moving the dependency specification into decorators on each task in the workflow DAG, Metaflow can actively manage the execution environments, which helps with optimization, portability, caching, and more.
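The following sketch shows why attaching dependencies to each task can help with caching. It is a plain-Python illustration of the idea, not Metaflow's actual API: the `requires` decorator and `environment_id` helper are hypothetical, and the package versions are arbitrary examples.

```python
import hashlib
import json
from typing import Callable


def requires(**packages: str) -> Callable:
    """Attach a pinned dependency spec to a task function (hypothetical)."""
    def wrap(fn: Callable) -> Callable:
        fn.dependencies = dict(packages)
        return fn
    return wrap


def environment_id(fn: Callable) -> str:
    """Derive a stable ID from the spec so identical environments are shared."""
    spec = json.dumps(getattr(fn, "dependencies", {}), sort_keys=True)
    return hashlib.sha256(spec.encode()).hexdigest()[:12]


@requires(pandas="2.2.2")
def load_data() -> str:
    return "loaded"


@requires(pandas="2.2.2", torch="2.3.0")
def train() -> str:
    return "trained"


@requires(torch="2.3.0", pandas="2.2.2")
def evaluate() -> str:
    return "evaluated"


# Same spec (regardless of argument order) maps to the same environment,
# so a scheduler could build it once and reuse it for both tasks.
print(environment_id(train) == environment_id(evaluate))   # True
print(environment_id(train) == environment_id(load_data))  # False
```

Because the specification lives next to the task, a scheduler can see which tasks share an environment before anything runs, and build or fetch each distinct environment only once.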

The session, however, was not just a showcase for that decorator feature. He went on to introduce Fast Bakery, an image “baking” service for AI/ML workloads. Fast Bakery applies a few more optimizations: running dependency resolution on dedicated machines, using filesystem layers to assemble an image in minimal time, and using custom object storage to provide a secure, high-throughput image registry.

In summary, this case study made several points clear:

- Dependency management is still a challenging, and arguably open, problem for MLOps engineers;

- Although container images are often heavy and hard to cache, containerization is still the most preferred way for deploying AI/ML workloads continuously with full reproducibility;

- As always, know your data before caching: Docker caches files en masse, but if the packages are largely independent of one another, there is a chance to cache them individually.
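The last point can be illustrated with a small sketch that compares one cache key for a whole environment against one key per package. This is a simplified model under the assumption that packages are independent of one another. It is not how Docker or Fast Bakery is implemented, and the package versions are arbitrary examples.

```python
import hashlib
from typing import Dict, Set


def image_key(packages: Dict[str, str]) -> str:
    """One key for the whole environment: any change invalidates it."""
    joined = ",".join(f"{name}=={ver}" for name, ver in sorted(packages.items()))
    return hashlib.sha256(joined.encode()).hexdigest()[:12]


def package_keys(packages: Dict[str, str]) -> Set[str]:
    """One key per package: unchanged packages keep their cache entry."""
    return {f"{name}=={ver}" for name, ver in packages.items()}


old = {"numpy": "1.26.4", "pandas": "2.2.2", "torch": "2.3.0"}
new = {"numpy": "1.26.4", "pandas": "2.2.2", "torch": "2.3.1"}

reused = package_keys(old) & package_keys(new)
to_fetch = package_keys(new) - package_keys(old)

print(image_key(old) == image_key(new))  # False: whole-image cache is missed
print(sorted(reused))                    # ['numpy==1.26.4', 'pandas==2.2.2']
print(sorted(to_fetch))                  # ['torch==2.3.1']
```

With a single key, bumping one package forces a full rebuild. With per-package keys, only the changed package is fetched again.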

Intae Ryoo

Product Manager

Amelia Shin

Software Engineer

Wayne Kim

Technical Communicator
