08 December 2022

10 Highlights from NeurIPS 2022

Large language models and reinforcement learning were the two main keywords at NeurIPS 2022.

After three years of virtual conferences, NeurIPS 2022, the Conference on Neural Information Processing Systems, was held as a hybrid event in New Orleans starting November 29. New Orleans, well known as the birthplace of jazz, hosted a major ML conference for the second time this year, following CVPR, the Conference on Computer Vision and Pattern Recognition, in June. The city offered jazz and exquisite paintings to machine learning researchers from around the world, and it also showed its culture of embracing diversity.

Affinity Workshops

On the first day of the conference, there were several affinity workshops representing diverse communities, such as Women in Machine Learning, LatinX in AI, Queer in AI, NewInML, Black in AI, Indigenous in AI, Global South in AI, and North Africans in ML. Overall, the workshops were a good channel for researchers to start conversations with others from different cultural backgrounds.

DeepMind

At NeurIPS 2022, numerous companies opened booths despite the hiring freeze. Among them, DeepMind stood out. It presented more than 40 papers, ran demos at its booth, and participated in workshops with partners. Its recent publications on AlphaTensor, AlphaCode, and self-supervised video learning with VITO are noteworthy. Please refer to this link↗ for the details.

Meta AI

Meta showed the ESM Metagenomic Atlas↗ demo related to protein folding and ran various NLP demos. It released the Casual Conversations Dataset v2↗ for building robust and fair AI systems, and the CICERO↗ demo presented the first AI agent to reach human-level performance in the game Diplomacy. For the complete list, please refer to this link↗.

Meta’s PyTorch Conference↗ was also held as a satellite conference at Generation Hall, a short distance from the convention center. On one side of the enormous venue, Meta employees answered questions from on-site participants about the release of PyTorch 2.0. On the other side, presentations from the main stage were broadcast live to online attendees.

Offline multiagent behavioral analysis

Been Kim, a research scientist at Google Brain, and her co-authors conducted a type of observational study on multi-agent systems. Their work “Beyond Rewards: a Hierarchical Perspective on Offline Multiagent Behavioral Analysis”↗ shows how a behavior embedding is learned for each agent and how a policy is generated conditioned on these individual embeddings.

Language models and RL agents

Three papers by Jesse Mu, a Ph.D. candidate at Stanford, were accepted at NeurIPS 2022, including “Improving Intrinsic Exploration with Language Abstractions↗.” That paper proposes a new approach to guiding the exploration of RL agents using language models. The other two papers↗ also sit at the intersection of RL and NLP, so those interested should look them up.

Papers by Sergey Levine and his team

Professor Sergey Levine at UC Berkeley and his team presented several RL papers, including Contrastive RL↗ and new model-based RL methods. Notable papers include “Mismatched No More: Joint Model-Policy Optimization for Model-Based RL↗,” “Imitating Past Successes can be Very Suboptimal↗,” and “Data-Driven Offline Decision-Making via Invariant Representation Learning↗.”

Poster session by Yann LeCun

Yann LeCun, a professor at NYU and Chief AI Scientist at Meta, presented the paper “Contrastive and Non-Contrastive Self-Supervised Learning Recover Global and Local Spectral Embedding Methods↗” at a poster session. The paper proposes a unifying framework for self-supervised learning methods.

Datasets and benchmarks

Datasets and benchmarks selected as outstanding papers also received a lot of attention. LAION-5B↗, a large-scale image-text dataset, was released, as was MineDojo↗, which can simulate 1000 different tasks based on Minecraft. I expect many researchers to conduct engaging NLP and reinforcement learning research with this dataset and benchmark next year.

GitHub repository with links to Transformer-related papers

There was also an interesting GitHub repository↗ listing the 150 Transformer-related papers accepted at NeurIPS 2022, more than twice the number accepted last year. If you are interested in Transformer research, go to this repository↗ and check it out.

Has it trained yet?

The “Has It Trained Yet (HITY)” workshop↗ provided practical tips for ML researchers training large language models. Susan Zhang from Meta AI walked through the trials and errors of training the OPT-175B language model, sharing the intuition behind each experiment. The experiment log, which covered three months of training on 1024 A100 GPUs while adjusting hyperparameters and dealing with hardware problems, was quite intriguing to ML engineers.

Conclusion

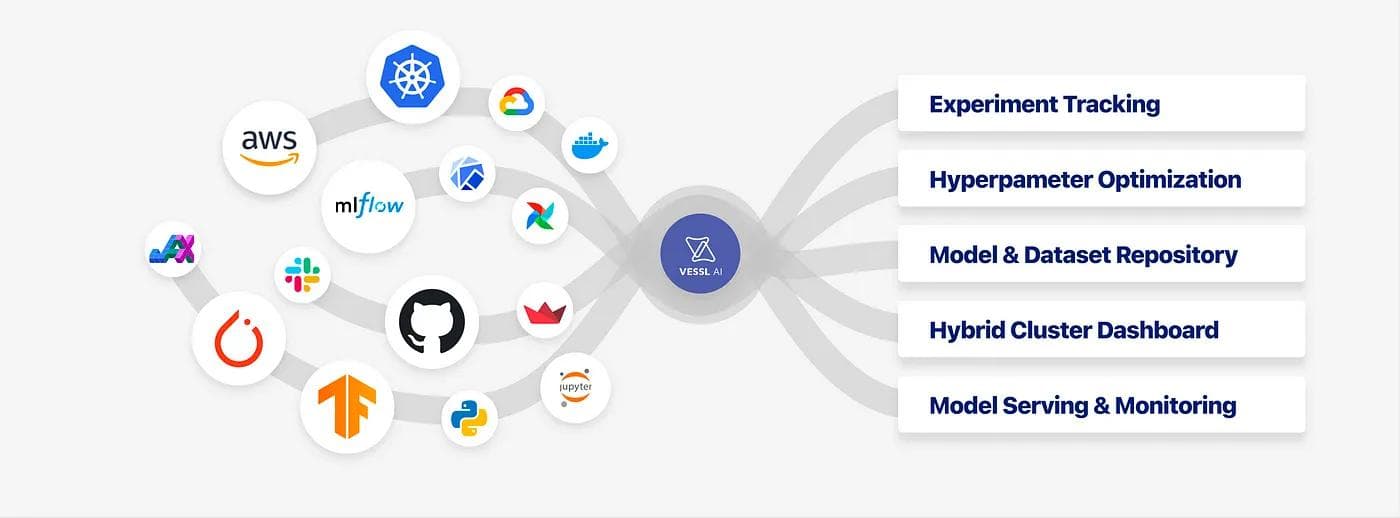

At NeurIPS 2022, VESSL AI met energetic ML researchers and industry professionals from various domains such as communications, finance, and health care. Our team will attend more conferences next year and listen to users’ voices directly to develop global-standard MLOps services.

—

Thank you to the Asan Nanum Foundation for sponsoring this trip.

Intae Ryoo

Product Manager
