28 December 2023

5 Highlight papers from EMNLP 2023 — Try them out at VESSL Hub

VESSL AI was at EMNLP 2023 — Here are 5 papers that we found the most interesting

With the flood of new LLMs, academic conferences on natural language processing have drawn immense interest. EMNLP is no exception: the number of accepted papers grew by more than 35%.

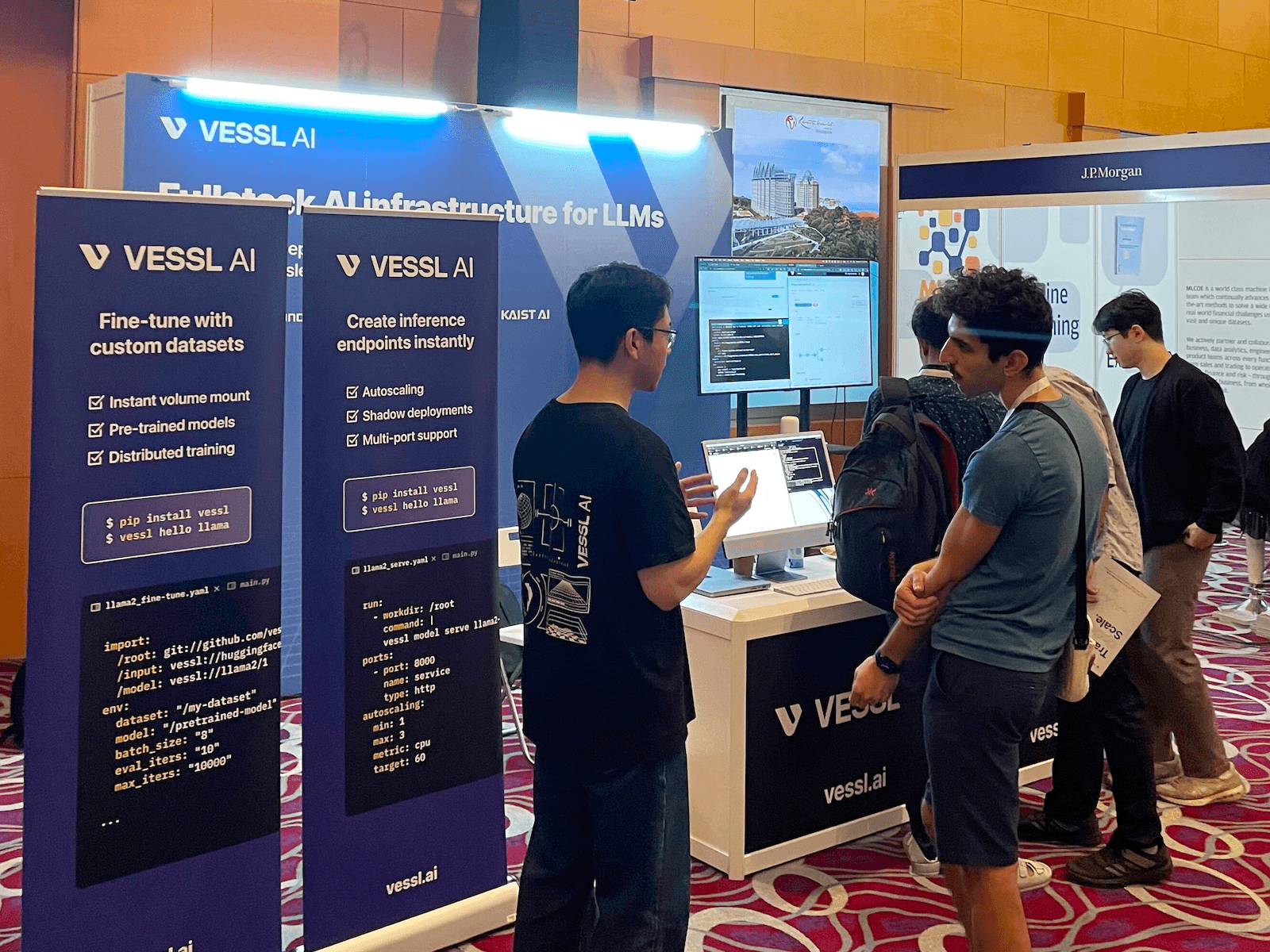

Our team was in Singapore last week for EMNLP 2023, showcasing our newest product VESSL Hub and introducing our free Academic plan to researchers around the world at our booth. While we were there, we also had a chance to talk to some of the authors and glance through their posters, including the 30 best and outstanding papers.

Here are the 5 papers that we found the most interesting. We prepared ready-to-run replicas of these papers and their code that you can launch instantly on our GPU cloud at VESSL Hub.

Run Best Papers & Outstanding Papers from EMNLP 2023

Explore papers and code presented at EMNLP, CVPR, NeurIPS, and more at VESSL Hub.

- ViPE: Visualise Pretty-much Everything

- Vec2Text: Text Embeddings Reveal (Almost) As Much As Text

- Ties Matter: Meta-Evaluating Modern Metrics with Pairwise Accuracy & Tie Calibration

1. ViPE: Visualise Pretty-much Everything

This paper discusses the limitations of recent text-to-image models like Stable Diffusion in depicting figurative and non-literal expressions. Earlier attempts to overcome these limitations relied on human annotations; datasets of multimodal idioms, metaphors, and similes such as IRFL are examples. ViPE aims to mimic the creative thoughts and nuanced emotions of human communication. It is a set of language models trained on a large-scale dataset of lyrics paired with noisy visual descriptions, generated by GPT-3.5 without human annotations or images.

Recently, some chatbots like Pi by Inflection AI and Luda Lee by our customer Scatter Lab have been differentiating themselves from ChatGPT by adding human attributes. With the recent release of models like ViPE that exhibit an understanding of figurative expressions comparable to human experts, we might see more interactive versions of these emotional AI services and other downstream applications ranging from music video generation to caption generation.

See ViPE in action at VESSL Hub →

2. PaperMage: A Unified Toolkit for Processing, Representing, and Manipulating Visually-Rich Scientific Documents

This paper introduces PaperMage, an open-source Python toolkit that unifies disparate state-of-the-art NLP and CV models into a single Python framework. Scientific documents are often harder to process — they are uniquely structured, visually rich, full of symbols, and come in difficult-to-use formats like PDFs. The authors aim to address these challenges by providing a unified, user-friendly, end-to-end framework for analyzing and processing scientific documents.

    from papermage import CoreRecipe

    recipe = CoreRecipe()
    doc = recipe.run("paper.pdf")
    doc.captions[0].text
    # "Figure 1. ..."

The rise of small language models (sLLMs) like Microsoft's recent Phi-2 highlights the importance of high-quality datasets, which can often be found in scientific papers. Tools like PaperMage, and LayoutParser before it, can help data scientists and engineers quickly extract “textbook quality” data that can be used for attributed QA systems or search engines for the latest scientific research. PaperMage is currently in production, powering Semantic Scholar, a free AI-powered research tool for scientific literature by the Allen Institute for AI.

Check out PaperMage in action at VESSL Hub →

3. Cross-Lingual Consistency of Factual Knowledge in Multilingual Language Models

Multilingual large-scale Pretrained Language Models (PLMs) have been found to contain significant amounts of factual knowledge, but there are notable differences in knowledge consistency across different languages. This means you may receive different or entirely wrong answers depending on the language you use to enter a prompt. To address this, the authors propose a metric called Ranking-based Consistency (RankC) to evaluate the cross-lingual consistency of factual knowledge in various multilingual PLMs.

The authors suggest that the cross-lingual consistency of a language model may depend more on the vocabulary overlap between languages than on similar grammar or word order. Contrary to what many assume, this consistency may not improve dramatically as model size increases. The paper highlights that the accuracy of the information a language model returns differs with the language of the user, and that this limitation may still be present in the largest GPT-3 or Llama 2-scale language models.
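To make the idea of a ranking-based consistency score concrete, here is a minimal sketch in Python. It assumes that, for each factual query, the model's candidate answers have already been ranked in each language and mapped to language-independent IDs. The function names and the exponential rank weighting are our own illustrative assumptions in the spirit of RankC, not the authors' reference implementation.

```python
import math


def rank_weights(n):
    """Weights that emphasise agreement near the top of the ranking (assumed scheme)."""
    raw = [math.exp(n - j) for j in range(1, n + 1)]
    total = sum(raw)
    return [value / total for value in raw]


def query_consistency(ranking_a, ranking_b):
    """Weighted average of top-j overlap between two rankings of the same candidates."""
    n = len(ranking_a)
    weights = rank_weights(n)
    score = 0.0
    for j in range(1, n + 1):
        # Fraction of the top-j candidates shared by both languages.
        overlap = len(set(ranking_a[:j]) & set(ranking_b[:j]))
        score += weights[j - 1] * overlap / j
    return score


def rankc(rankings_a, rankings_b):
    """Average the per-query consistency over all queries."""
    scores = [query_consistency(a, b) for a, b in zip(rankings_a, rankings_b)]
    return sum(scores) / len(scores)


# Hypothetical candidate rankings for two queries in two languages.
english = [["paris", "lyon", "rome"], ["einstein", "bohr", "curie"]]
spanish = [["paris", "rome", "lyon"], ["bohr", "einstein", "curie"]]
consistency = rankc(english, spanish)
```

A score of 1 means the two languages rank every candidate identically. Disagreements near the top of the ranking lower the score more than disagreements near the bottom.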

You can check the RankC of different languages at VESSL Hub →

4. Vec2Text: Text Embeddings Reveal (Almost) As Much As Text

The paper discusses how embeddings, even those of texts that hold private information, can be “recovered” into the original text. The authors share a multi-step method that can recover up to 92% of 32-token text inputs exactly through “controlled generation”. While training a decoder to invert embeddings directly gives you similar text with some overlapping words, training an encoder-decoder inversion model that iteratively corrects and re-embeds text can recover much of the original.

Here, the authors share a case in which such a model recovered personal information like full names from clinical notes. We’ve seen a few experiments like this that invert OpenAI's text-embedding-ada-002 model to reconstruct input texts from just embeddings.
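The correct-and-re-embed loop can be illustrated with a toy example. The sketch below is not the paper's method: it swaps the learned encoder-decoder corrector for a greedy search and uses a made-up hash-based embedder (`embed`, `VOCAB`, and `invert` are all hypothetical names). It only shows the core idea of proposing a hypothesis, re-embedding it, and keeping corrections that move its embedding closer to the target.

```python
import hashlib

# Hypothetical tiny vocabulary; a real system works over a full tokenizer.
VOCAB = ["patient", "john", "smith", "reports", "mild", "fever", "cough", "today"]
DIM = 32  # a SHA-256 digest has exactly 32 bytes


def token_vector(position, token):
    """Deterministic pseudo-random vector for a (position, token) pair."""
    digest = hashlib.sha256(f"{position}:{token}".encode("utf-8")).digest()
    return [(byte - 127.5) / 127.5 for byte in digest]


def embed(tokens):
    """Toy embedder: sum of position-specific token vectors."""
    vector = [0.0] * DIM
    for position, token in enumerate(tokens):
        for dim, value in enumerate(token_vector(position, token)):
            vector[dim] += value
    return vector


def distance(u, v):
    """Squared Euclidean distance between two vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def invert(target, length, max_rounds=10):
    """Greedily correct a hypothesis, re-embedding after every proposed change."""
    hypothesis = [VOCAB[0]] * length
    history = [distance(embed(hypothesis), target)]
    for _ in range(max_rounds):
        improved = False
        for position in range(length):
            for candidate in VOCAB:
                trial = hypothesis[:position] + [candidate] + hypothesis[position + 1:]
                trial_distance = distance(embed(trial), target)
                if trial_distance < history[-1]:
                    # Keep the correction only if the re-embedded text is closer.
                    hypothesis = trial
                    history.append(trial_distance)
                    improved = True
        if not improved:
            break
    return hypothesis, history


# The "attacker" only sees the embedding (and, in this toy, the length).
secret = ["john", "smith", "reports", "fever"]
recovered, history = invert(embed(secret), length=len(secret))
```

Each accepted correction shrinks the distance to the target embedding, so `history` is strictly decreasing. Whether the toy search lands exactly on `secret` depends on the made-up embedder. The paper's learned corrector plays the role of the inner search here.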

See how Vec2Text performs at VESSL Hub →

5. Ties Matter: Meta-Evaluating Modern Metrics with Pairwise Accuracy and Tie Calibration

Kendall's τ is a statistical measure commonly used to meta-evaluate how well machine translation evaluation metrics assess individual translations. However, Kendall's τ comes with a limitation: it can be sensitive to tied rankings, where two or more items have the same rank. With advanced models, the output translations might be very close in quality, leading to frequent ties in ranking, which makes Kendall's τ unsuitable for more fine-grained, nuanced distinctions in translation quality.

The authors address these shortcomings of Kendall's τ, which are a notable issue for LLM-based metrics like GEMBA that predict many ties. They suggest an alternative approach that uses pairwise accuracy to give metrics credit for correctly predicting ties, in combination with a tie calibration procedure that automatically introduces ties into metric scores, enabling fair comparison between metrics that do and do not predict ties.
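As a rough illustration of how these two pieces fit together, the sketch below computes pairwise accuracy with ties and then searches for a tie threshold. It assumes both human and metric scores are oriented so that higher means better, and it picks the threshold `epsilon` that maximises accuracy on the same data. The function names and the toy scores are ours, and the search is a simplified reading of the procedure rather than the authors' code.

```python
from itertools import combinations


def sign(x):
    """Return -1, 0, or 1 depending on the sign of x."""
    return (x > 0) - (x < 0)


def pairwise_accuracy(human, metric, epsilon=0):
    """Share of translation pairs where the metric agrees with the human
    judgement on better / worse / tied. Metric differences of at most
    `epsilon` are treated as ties."""
    correct = 0
    total = 0
    for i, j in combinations(range(len(human)), 2):
        human_relation = sign(human[i] - human[j])
        difference = metric[i] - metric[j]
        metric_relation = 0 if abs(difference) <= epsilon else sign(difference)
        correct += human_relation == metric_relation
        total += 1
    return correct / total


def calibrate_ties(human, metric):
    """Try every observed metric difference as a tie threshold and keep the
    smallest one that gives the highest pairwise accuracy."""
    candidates = sorted({0} | {abs(a - b) for a, b in combinations(metric, 2)})
    best_epsilon, best_accuracy = 0, -1.0
    for epsilon in candidates:
        accuracy = pairwise_accuracy(human, metric, epsilon)
        if accuracy > best_accuracy:  # strict, so earlier (smaller) epsilon wins
            best_epsilon, best_accuracy = epsilon, accuracy
    return best_epsilon, best_accuracy


# Toy data: humans rate the first two translations as equally good,
# but the metric separates them by a single point.
human_scores = [3, 3, 1, 2]
metric_scores = [81, 80, 40, 62]

uncalibrated = pairwise_accuracy(human_scores, metric_scores)  # 5 of 6 pairs correct
epsilon, calibrated = calibrate_ties(human_scores, metric_scores)  # epsilon 1, all 6 correct
```

Without calibration, the metric is penalised for never predicting the human tie. With a threshold of 1, the near-identical scores are treated as a tie and the metric gets credit for it.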

Calculate the proposed pairwise accuracy with tie calibration at VESSL Hub →

VESSL for Academic

Our free academic plan is dedicated to helping graduate students and faculty members set up a SLURM-alternative job scheduler with zero maintenance overhead. Apply now to get access.

- Run GPU-backed training jobs and notebook servers instantly

- Integrate lab-wide clouds and on-premise clusters with a single command

- Monitor GPU usage down to each node

Yong Hee

Growth Manager

Lucas

Software Engineer
