Machine Learning

14 August 2024

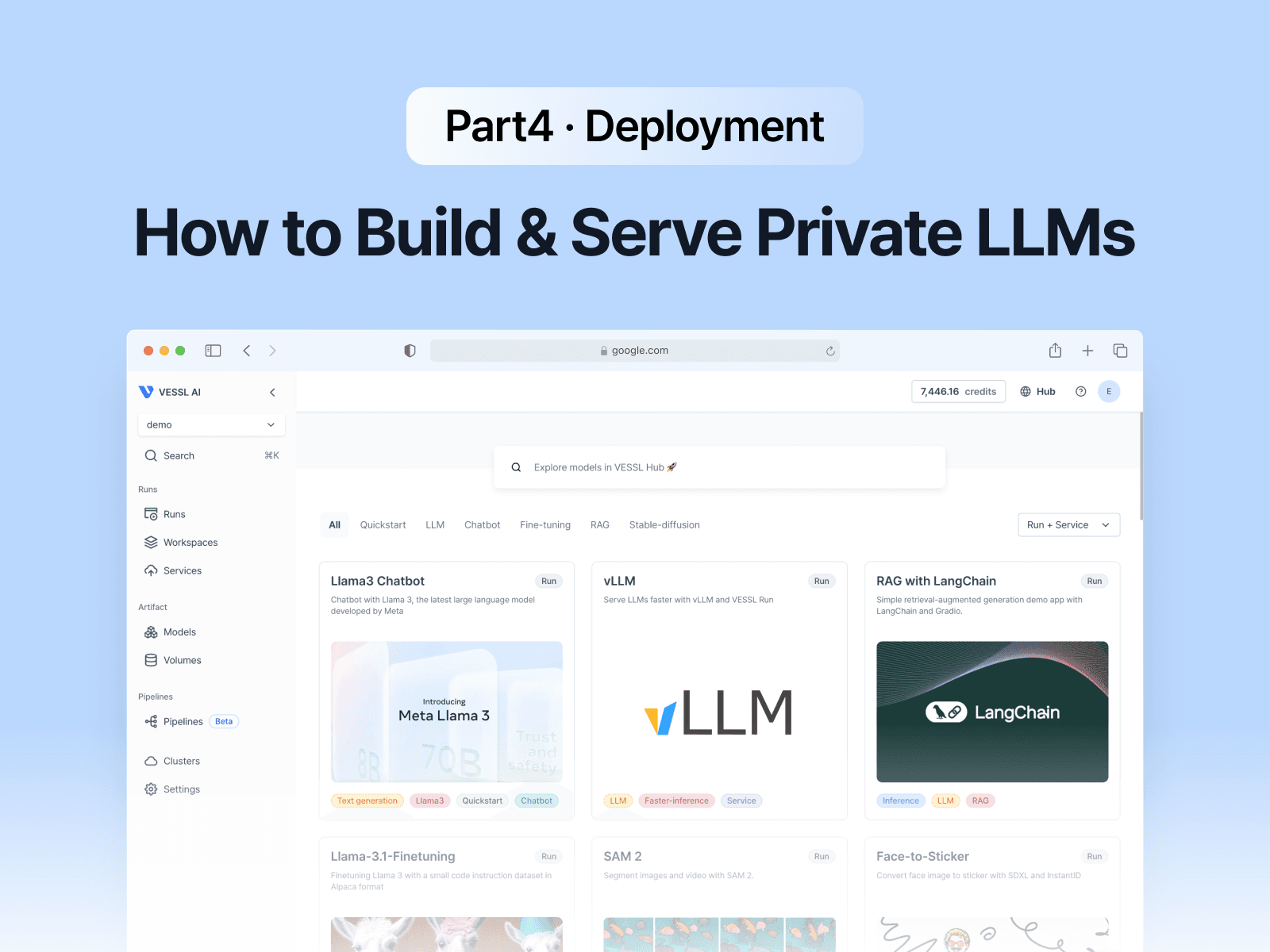

How To Build & Serve Private LLMs - (4) Deployment

This post delves deep into the deployment of LLMs.

This is the last article in a series on How to Build & Serve Private LLMs. You can read the previous posts here:

(2) Retrieval-Augmented Generation (RAG)↗

In our previous posts, we have discussed how to enhance LLM performance using techniques like RAG and fine-tuning. However, even with these optimizations, LLMs are inherently large and require significant computational resources for deployment. This post will explore two methods to make LLM deployment faster and more efficient: quantization and attention operations optimization.

Quantization

Quantization maps numbers from a higher-precision type to a lower-precision type, for example from 32-bit floating-point numbers (float32) to 8-bit integers (int8). The lower-precision type can represent fewer distinct values over a narrower range, but each value takes a quarter of the memory, so less data has to be stored and moved and computations become more efficient.
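To make the mapping concrete, here is a minimal sketch of symmetric int8 quantization in plain Python. The function names, the single per-tensor scale, and the sample values are our own illustrative assumptions; production libraries typically use per-channel or per-group scales and optimized kernels.

```python
def quantize_int8(values):
    """Symmetric quantization: map floats to integers in [-127, 127] plus one float scale."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Approximate reconstruction of the original floats."""
    return [x * scale for x in q]


weights = [0.02, -1.27, 0.635, 0.9]  # made-up example values
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error per value is bounded by half a quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

Each weight is now stored as a single byte, and the only extra state is one shared scale.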

For LLMs, quantization stores the model's weights at lower precision, which reduces GPU memory usage. This comes with a trade-off in accuracy, but the impact is generally minimal for large models. It's similar to how reducing the resolution of a high-quality image affects its quality less critically than reducing the resolution of a low-quality image.
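As a rough back-of-the-envelope check, the sketch below estimates the memory needed for the weights alone at different precisions. The 7-billion-parameter model size is a hypothetical example, and the estimate ignores quantization metadata (scales, zero points), activations, and the KV cache.

```python
BITS_PER_WEIGHT = {"float32": 32, "float16": 16, "int8": 8, "int4": 4}


def weight_memory_gb(num_params, dtype):
    """Memory for the weights only, in gigabytes (10^9 bytes)."""
    return num_params * BITS_PER_WEIGHT[dtype] / 8 / 1e9


num_params = 7_000_000_000  # hypothetical 7B-parameter model
for dtype in BITS_PER_WEIGHT:
    print(f"{dtype}: {weight_memory_gb(num_params, dtype)} GB")
# float32: 28.0 GB, float16: 14.0 GB, int8: 7.0 GB, int4: 3.5 GB
```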

Activation-aware Weight Quantization (AWQ)

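Among the various quantization techniques, Activation-aware Weight Quantization (AWQ↗) has gained popularity. AWQ starts from the observation that weights are not equally important: a small fraction, roughly 1%, has an outsized effect on the model's output, and these salient weights can be identified from activation statistics rather than from the weights themselves. Instead of keeping those weights in float16, which would require hardware-unfriendly mixed precision, AWQ scales the salient weight channels before quantization so that they lose less precision, and then quantizes all weights to 4-bit integers (int4). This keeps the model's accuracy close to that of the original.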
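The toy sketch below illustrates the idea of protecting an activation-salient channel by scaling it before quantization. Everything in it is an assumption for illustration: the weights, activations, and the scale factor of 2 are hand-picked so the effect is visible, and the quantizer uses one scale per row. The actual AWQ method searches for per-channel scales on calibration data and uses group-wise quantization.

```python
QMAX = 7  # symmetric int4 range used in this sketch: [-7, 7]


def fake_quant_int4(row):
    """Quantize a row to int4 and immediately dequantize it (one scale per row)."""
    step = max(abs(w) for w in row) / QMAX
    return [max(-QMAX, min(QMAX, round(w / step))) * step for w in row]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


weights = [0.30, -0.70, 0.14, 0.52]  # one output row, four input channels
activations = [0.1, 0.2, 8.0, 0.1]   # channel 2 carries much larger activations

# The salient channel is chosen from activation magnitude, not weight magnitude.
salient = max(range(len(activations)), key=lambda i: abs(activations[i]))
s = 2.0  # hypothetical scale factor; AWQ would search for it

reference = dot(weights, activations)
plain = dot(fake_quant_int4(weights), activations)

# Scale the salient weight up and the matching activation down: the product is
# unchanged in exact arithmetic, but the weight's rounding error shrinks by 1/s.
scaled_w = [w * s if i == salient else w for i, w in enumerate(weights)]
scaled_x = [x / s if i == salient else x for i, x in enumerate(activations)]
awq_like = dot(fake_quant_int4(scaled_w), scaled_x)

plain_error = abs(reference - plain)
awq_error = abs(reference - awq_like)
print(plain_error, awq_error)
```

In this example the scaled weight does not change the row's maximum, so the quantization step stays the same while the error on the salient channel is reduced.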

GPTQ

GPTQ is a post-training quantization technique that quantizes each row of the weight matrix independently, choosing the quantized values that minimize the resulting error in the layer's output. The weights are stored as int4 and restored to float16 on the fly during inference. Because the int4 weights are dequantized inside a fused kernel rather than in the GPU's global memory, weight memory drops by nearly 4 times compared with float16. Additionally, lower bitwidths mean less data has to be moved, resulting in faster inference.
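The sketch below covers only the storage side of this scheme: packing two 4-bit codes into each byte and dequantizing them when they are needed. It does not implement GPTQ's error-minimizing quantization itself, and the helper names, the scale, and the zero point are illustrative assumptions.

```python
def pack_int4(codes):
    """Pack unsigned 4-bit codes (0..15) two per byte, low nibble first."""
    assert len(codes) % 2 == 0 and all(0 <= c <= 15 for c in codes)
    return bytes(lo | (hi << 4) for lo, hi in zip(codes[0::2], codes[1::2]))


def unpack_int4(packed):
    """Recover the 4-bit codes from the packed bytes."""
    codes = []
    for byte in packed:
        codes.append(byte & 0x0F)
        codes.append(byte >> 4)
    return codes


def dequantize(packed, scale, zero_point):
    """Restore approximate float weights just before they are used."""
    return [(c - zero_point) * scale for c in unpack_int4(packed)]


codes = [0, 15, 8, 3]          # made-up quantized weights
packed = pack_int4(codes)      # 2 bytes, versus 8 bytes for four float16 values
restored = dequantize(packed, scale=0.5, zero_point=8)
print(len(packed), restored)   # 2 [-4.0, 3.5, 0.0, -2.5]
```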

Mixed Auto-Regressive Linear Kernel (Marlin)

Mixed Auto-Regressive Linear Kernel (Marlin) is a mixed-precision inference kernel designed to speed up LLM inference. It multiplies float16 activations by int4 weights and achieves near-ideal speedups of up to 4 times for batch sizes of up to 16 to 32 tokens. It uses techniques such as asynchronous global weight loads and circular shared memory queues to minimize data bottlenecks and keep the GPU's compute units busy.

Optimizing Attention Operations

The self-attention mechanism used by transformer models is computationally intensive, requiring n^2 attention score calculations for a sequence of length n. Several techniques have been developed to optimize these operations. Here, we’ll discuss PagedAttention and FlashAttention.
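To see where the quadratic cost comes from, here is a minimal sketch that computes the scaled dot-product scores for a short sequence. The vectors are made-up values and the function name is our own; real implementations do this as a batched matrix multiplication on the GPU.

```python
import math


def attention_scores(queries, keys):
    """Scaled dot-product scores: one score for every (query, key) pair."""
    d = len(queries[0])
    return [
        [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        for q in queries
    ]


# Four tokens with two-dimensional query and key vectors (made-up values).
queries = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, -0.5]]
keys = [[0.2, 0.1], [0.4, -0.3], [-0.1, 0.9], [0.7, 0.7]]

scores = attention_scores(queries, keys)
num_scores = sum(len(row) for row in scores)
print(num_scores)  # 16, i.e. n^2 for n = 4
```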

PagedAttention

In self-attention, generating each new token requires the key and value vectors of all previous tokens, and recomputing them at every step is wasteful. KV caching stores these vectors in GPU memory so that each one is computed only once.
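The saving from KV caching can be sketched by counting how often the key and value projections are computed. The `ToyProjector` class and its arithmetic are hypothetical stand-ins for a model's real projection layers.

```python
class ToyProjector:
    """Stand-in for a model's key/value projections; counts how often it runs."""

    def __init__(self):
        self.calls = 0

    def kv(self, embedding):
        self.calls += 1
        key = [2.0 * x for x in embedding]
        value = [x + 1.0 for x in embedding]
        return key, value


def decode_without_cache(embeddings, projector):
    """Recompute keys and values for the whole prefix at every step."""
    kv = []
    for step in range(1, len(embeddings) + 1):
        kv = [projector.kv(e) for e in embeddings[:step]]
    return kv


def decode_with_cache(embeddings, projector):
    """Compute keys and values once per token and keep them in a cache."""
    cache = []
    for e in embeddings:
        cache.append(projector.kv(e))
    return cache


embeddings = [[float(i), float(-i)] for i in range(5)]  # five made-up tokens
no_cache, with_cache = ToyProjector(), ToyProjector()
result_a = decode_without_cache(embeddings, no_cache)
result_b = decode_with_cache(embeddings, with_cache)
print(no_cache.calls, with_cache.calls)  # 15 5
```

Both versions end up with the same keys and values; the cached version simply avoids the repeated work, at the cost of holding the cache in memory.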

Traditional LLM serving systems store each request's KV cache in one contiguous memory region. Because the final length of a sequence is not known in advance, this leads to fragmentation and over-reservation as the cache grows and shrinks. PagedAttention↗ addresses this by partitioning the KV cache into fixed-size blocks that can be stored in non-contiguous memory, inspired by the operating system's virtual memory and paging concepts. A block table maps each sequence's logical blocks to wherever they physically reside. This method improves GPU memory utilization by allocating blocks on demand instead of pre-allocating large memory regions.
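The bookkeeping behind this idea can be sketched with a small block table. The class below is a simplified illustration with hypothetical names, not vLLM's implementation: it stores placeholder strings instead of tensors and leaves out block sharing and eviction.

```python
class PagedKVCache:
    """Toy KV cache that hands out fixed-size blocks on demand."""

    def __init__(self, num_blocks, block_size):
        self.block_size = block_size
        self.free_blocks = list(range(num_blocks))
        self.storage = [[None] * block_size for _ in range(num_blocks)]
        self.block_tables = {}  # sequence id -> list of physical block ids
        self.lengths = {}       # sequence id -> number of cached tokens

    def append(self, seq_id, kv):
        table = self.block_tables.setdefault(seq_id, [])
        length = self.lengths.get(seq_id, 0)
        if length % self.block_size == 0:  # no block yet, or the last one is full
            if not self.free_blocks:
                raise MemoryError("no free KV blocks")
            table.append(self.free_blocks.pop())
        block = table[length // self.block_size]
        self.storage[block][length % self.block_size] = kv
        self.lengths[seq_id] = length + 1

    def get(self, seq_id, position):
        block = self.block_tables[seq_id][position // self.block_size]
        return self.storage[block][position % self.block_size]

    def free(self, seq_id):
        """Return a finished sequence's blocks to the pool."""
        self.free_blocks.extend(self.block_tables.pop(seq_id))
        del self.lengths[seq_id]


cache = PagedKVCache(num_blocks=4, block_size=2)
for seq_id, kv in [("a", "a0"), ("b", "b0"), ("a", "a1"), ("a", "a2")]:
    cache.append(seq_id, kv)

print(cache.block_tables["a"])  # [3, 1]: the blocks are not adjacent
print(cache.get("a", 2))        # a2
cache.free("b")
```

Because sequence "b" claimed a block between the two allocations for "a", the blocks for "a" are not adjacent, yet lookups still work through the block table.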

FlashAttention

FlashAttention↗ is designed to handle large-scale attention operations more efficiently using two strategies: tiling and recomputation. Tiling divides the large matrix operations into smaller blocks (tiles) that are processed one after another in fast on-chip memory. Recomputation avoids storing the large intermediate attention matrix during the forward pass and recalculates it during the backward pass instead. Together, these strategies reduce the number of read/write operations between the GPU's High Bandwidth Memory (HBM) and its on-chip SRAM, accelerating the attention computation.
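The tiling idea relies on computing softmax incrementally, so the full row of scores never has to be held at once. The sketch below shows this for a single query in plain Python and checks it against a straightforward implementation. It illustrates the arithmetic only, under our own naming and made-up data; it says nothing about the memory hierarchy of the real CUDA kernels and does not cover the backward-pass recomputation.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def naive_attention(q, keys, values):
    """Reference version: materialize every score, then apply softmax."""
    scale = 1.0 / math.sqrt(len(q))
    scores = [dot(q, k) * scale for k in keys]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [dot(exps, [v[j] for v in values]) / total for j in range(len(values[0]))]


def tiled_attention(q, keys, values, tile_size):
    """Process keys and values tile by tile with a running softmax."""
    scale = 1.0 / math.sqrt(len(q))
    running_max = -math.inf
    running_sum = 0.0
    acc = [0.0] * len(values[0])
    for start in range(0, len(keys), tile_size):
        k_tile = keys[start:start + tile_size]
        v_tile = values[start:start + tile_size]
        scores = [dot(q, k) * scale for k in k_tile]
        new_max = max(running_max, max(scores))
        # Rescale what was accumulated under the old maximum (0.0 on the first tile).
        correction = math.exp(running_max - new_max)
        exps = [math.exp(s - new_max) for s in scores]
        running_sum = running_sum * correction + sum(exps)
        acc = [
            a * correction + dot(exps, [v[j] for v in v_tile])
            for j, a in enumerate(acc)
        ]
        running_max = new_max
    return [a / running_sum for a in acc]


q = [0.5, -1.0]
keys = [[1.0, 0.0], [0.0, 2.0], [-1.0, 1.0], [3.0, 0.5], [0.2, 0.2]]
values = [[1.0, 2.0], [0.0, 1.0], [4.0, -1.0], [2.0, 2.0], [-3.0, 0.5]]

expected = naive_attention(q, keys, values)
tiled = tiled_attention(q, keys, values, tile_size=2)
print(expected, tiled)
```

The two results agree up to floating-point rounding, even though the tiled version only ever looks at two keys at a time.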

Integrations

Thanks to the efforts of the open-source community, applying advanced quantization and attention optimization techniques has become much easier:

- Most LLMs supported by Hugging Face's Transformers library now include FlashAttention-based optimizations. You can find a list of model architectures that support FlashAttention in the Transformers documentation↗.

- vLLM↗ and Text Generation Inference (TGI↗) automatically load and use optimization techniques such as PagedAttention, FlashAttention, and Marlin when they are available for the model and hardware. In many cases, this lets your LLM deployments benefit from these optimizations without additional configuration.
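As a rough sketch of what this looks like in practice, the functions below request FlashAttention in Transformers and load an AWQ-quantized checkpoint in vLLM. The function names are our own, `model_id` is a placeholder you would replace with a real checkpoint, and both calls assume a supported GPU with the relevant packages (`transformers`, `flash-attn`, `vllm`) installed. Supported options change between releases, so please check the current documentation of each library.

```python
def load_with_flash_attention(model_id):
    """Load a Transformers model and ask for the FlashAttention-2 backend."""
    # Imported here so the sketch can be read without the GPU stack installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype=torch.float16,  # FlashAttention needs half precision
        attn_implementation="flash_attention_2",
    )
    return tokenizer, model


def generate_with_vllm(model_id, prompt):
    """Serve an AWQ-quantized checkpoint with vLLM (PagedAttention is built in)."""
    from vllm import LLM, SamplingParams

    llm = LLM(model=model_id, quantization="awq")  # model_id must be an AWQ checkpoint
    outputs = llm.generate([prompt], SamplingParams(max_tokens=64))
    return outputs[0].outputs[0].text
```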

You can also easily host LLMs with these optimizations using VESSL. For more details, please refer to VESSL Hub↗.

Conclusion

In this post, we explored quantization and attention optimization techniques to make LLM deployment faster and more efficient. These strategies can significantly reduce computational costs and improve performance, making them valuable for building private LLMs. Thank you for reading, and we hope these insights help you in your projects!

Reference

- Achieving FP32 Accuracy for INT8 Inference Using Quantization Aware Training with NVIDIA TensorRT↗

- AWQ: Activation-aware Weight Quantization for On-Device LLM Compression and Acceleration↗

- GPTQ: Accurate Post-Training Quantization for Generative Pre-trained Transformers↗

- Marlin GitHub repository↗

- vLLM: Easy, Fast, and Cheap LLM Serving with PagedAttention↗

- FlashAttention GitHub repository↗

Ian Lee

Solutions Engineer

Jay Chun

CTO

Wayne Kim

Technical Communicator
