Machine Learning

10 July 2024

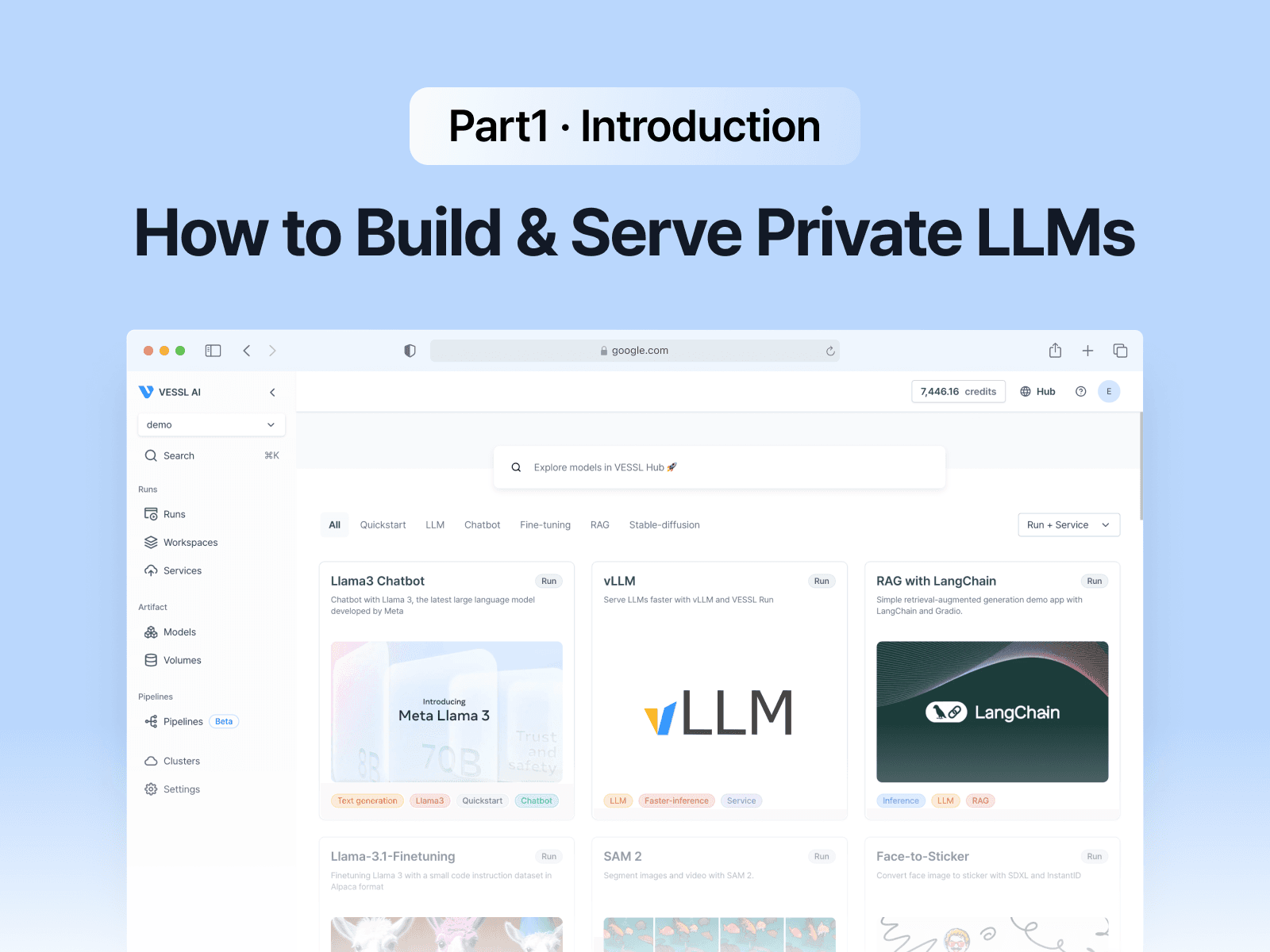

How To Build & Serve Private LLMs - (1) Introduction


This is the first article in a series on how to build and serve private LLMs. Upcoming posts will go deeper into the details, so stay tuned!

Since the launch of OpenAI's GPT-3.5 and ChatGPT at the end of 2022, public interest in Large Language Models (LLMs) has significantly increased. This surge in interest has led to the development of various LLM-based services over the past year and a half.

Developers are enhancing their work efficiency with tools like GitHub Copilot, and some are using ChatGPT for learning English or writing. Additionally, image generation models like DALL-E and Midjourney are being used to add illustrations to content.

Challenges Faced by Businesses with LLM Services

However, for many companies, using public LLM services isn't always feasible. Common challenges in using LLM services for business include:

- Security issues: Sensitive data in sectors such as healthcare and finance often cannot be exported outside the company's data centers. Some companies also keep their networks isolated from the external environment.

- Model customization: Models customized for specific fields are often necessary because most large language models, which are pretrained on general data, may not provide the precise information needed in specialized areas, such as medicine, finance, coding, or law.

- Cost of LLM services: Typical LLM services charge by usage, so the bill grows with volume and can become economically burdensome for large-scale use.

In such cases, building your own LLM can be a solution. If you have enough computing resources and data, you can train your own language model on your own servers, ensuring that no data leaves your data center. You can also customize the model however you want, and you no longer pay usage-based fees to an external provider.

Strategies to Overcome Obstacles in Building LLMs

Building your own LLM, however, is not a straightforward job. As the term 'Large' Language Model suggests, even 'small' LLMs have billions of parameters (typically 7-8B), requiring significant GPU resources and time to train. Even if you have sufficient resources for training, creating a model that outperforms recent ones like GPT-4 or Claude is a formidable challenge.
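To make the scale concrete, here is a rough back-of-the-envelope sketch of how much memory the weights of a 7B-parameter model occupy at a few common precisions. The function name `weight_memory_gb` and the precision table are illustrative assumptions, and the estimate covers the weights only; training typically needs several times more, since gradients and optimizer state are stored as well.

```python
# Approximate bytes needed to store one parameter at common precisions.
# (Illustrative table; real formats may add small overheads such as scales.)
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return the memory needed for the weights alone, in GB (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Print the estimate for a hypothetical 7B-parameter model.
for precision in BYTES_PER_PARAM:
    size = weight_memory_gb(7e9, precision)
    print(f"7B parameters at {precision}: {size:.1f} GB")
```

At 16-bit precision the weights alone come to about 14 GB, which already exceeds the memory of many consumer GPUs before any activations or training state are counted.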

In this series, we will introduce three strategies to overcome these obstacles:

- Providing a 'cheat sheet' to the model using Retrieval-Augmented Generation (RAG): RAG combines a retrieval mechanism, which fetches relevant documents or information from a large corpus, with a generative model that uses the retrieved material to produce its response. Drawing on external knowledge sources in this way helps the model generate more accurate and relevant answers.

- Enhancing model performance through fine-tuning: Fine-tuning is the process of taking a pre-trained model and further training it on a specific dataset to adapt it to a particular task or domain, improving its performance for that specific use case.

- Reducing training and inference costs with techniques like quantization and attention operation optimization: Model quantization reduces the precision of a model's parameters to lower bit-widths, decreasing its size and computational requirements while largely preserving accuracy. Attention operation optimization computes the attention operation more efficiently, reducing its compute and memory cost and improving processing speed.
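For the RAG strategy in the list above, the following is a minimal sketch of the retrieve-then-prompt flow, not a production design. It assumes a toy word-count similarity in place of the learned embeddings and vector store a real system would typically use, and the helper names (`retrieve`, `build_prompt`) and sample documents are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query, documents, top_k=1):
    """Return the top_k documents most similar to the query."""
    query_vec = Counter(tokenize(query))
    scored = [
        (cosine_similarity(query_vec, Counter(tokenize(doc))), doc)
        for doc in documents
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_prompt(query, documents, top_k=1):
    """Put the retrieved documents in front of the question (the 'cheat sheet')."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, top_k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


# Hypothetical internal documents that never leave your own servers.
documents = [
    "Refunds are processed within 14 days of the return request.",
    "The office is closed on public holidays.",
    "Support is available by email on weekdays.",
]
prompt = build_prompt("How long do refunds take?", documents)
print(prompt)
```

The resulting prompt would then be passed to the generative model, which answers from the retrieved context rather than from its pretraining data alone.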
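For the quantization strategy in the list above, here is a small sketch of one simple scheme: symmetric 8-bit quantization with a single shared scale. It is an illustration under that assumption rather than the method any particular library uses, and the function names and sample weights are hypothetical.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the stored integers."""
    return [q * scale for q in quantized]


# Hypothetical weights standing in for one small slice of a model.
weights = [0.12, -0.5, 0.33, 0.9, -1.27]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# The rounding error per weight is at most half of one quantization step.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print("quantized:", quantized)
print("scale:", scale)
print("max error:", max_error)
```

Each weight is now stored as a single byte plus one shared scale, instead of 2 or 4 bytes per weight, at the price of a small rounding error.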

We will cover these topics in more detail in subsequent posts. Thank you for your interest and we look forward to sharing more insights!


Ian Lee

Solutions Engineer

Jay Chun

CTO

Wayne Kim

Technical Communicator
