26 October 2023

VESSL AI at Google for Startups Accelerator: Cloud North America cohort

Sharing our time at Google's 10-week startup accelerator program and how it shaped VESSL AI's vision for AI cloud

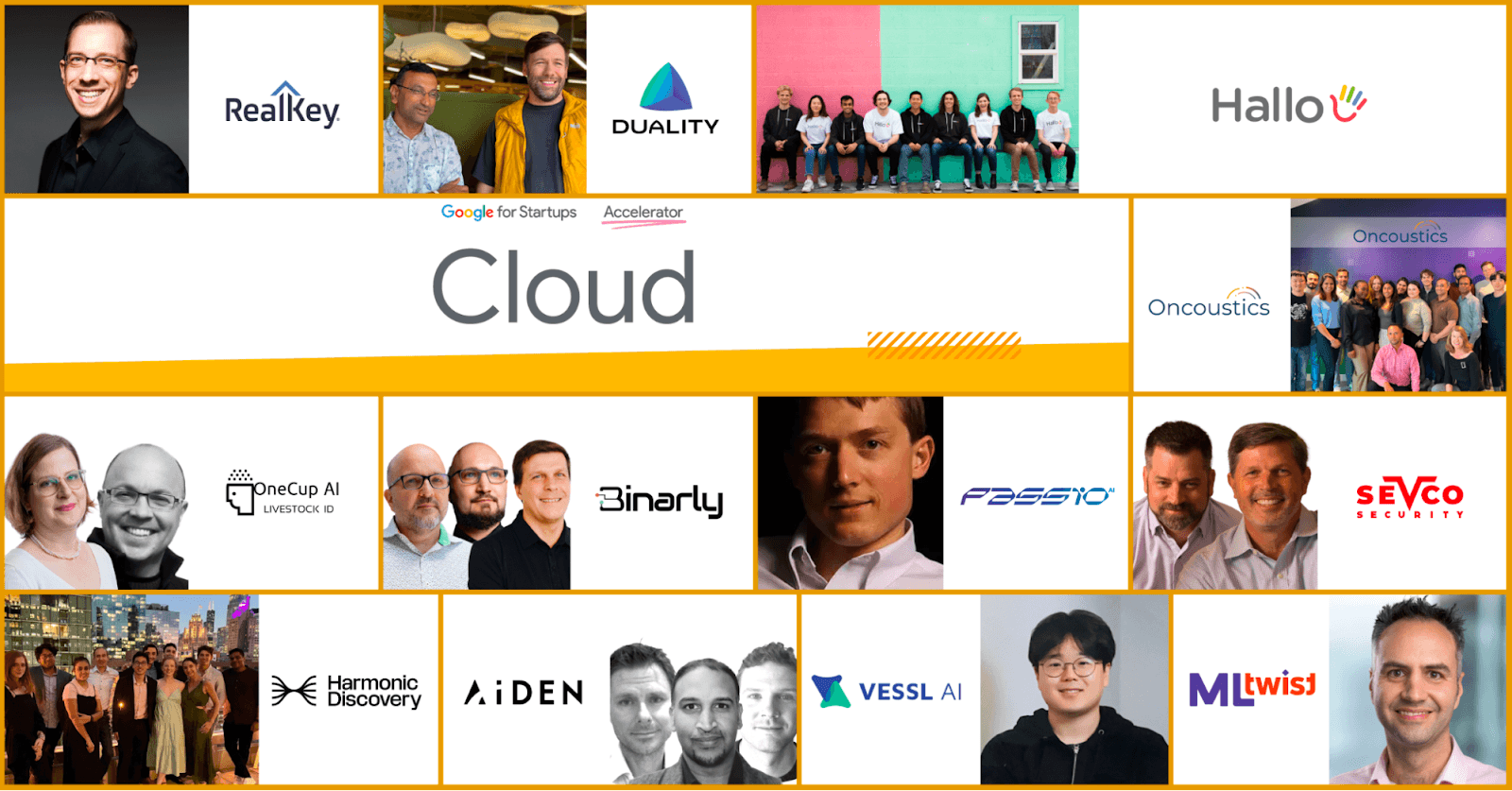

In June, we shared the exciting news that VESSL AI was selected for the first cohort of Google for Startups Accelerator: Cloud North America. It's a 10-week accelerator program for cloud-native technology startups headquartered in North America, providing cloud mentorship and technical project support on product design, cloud partnership, AI/ML, and more. Working alongside fast-growing startups like Harmonic Discovery, OneCup AI, and RealKey gave us a unique vantage point on the future of cloud technologies.

During our time in the accelerator, we observed a shared enthusiasm for generative AI among our peer startups. Generative AI is driving a profound shift that is poised to redefine technology platforms. It is not a fleeting trend; it's "eating the world." Recent advancements in this domain, from the rise of ChatGPT to the proliferation of generative applications, underscore its transformative potential. Our mission is to build the best MLOps infrastructure for developing AI faster at scale, and the program gave us the chance to share our goal of playing a pivotal role in this domain with its mentors and our peers.

Our interactions with Google Cloud and esteemed mentors like Peter Norvig provided deep technical insights into recent trends in AI, particularly in three areas:

Scalable AI workflows for LLMs: We delved into creating scalable AI workflows specifically designed to run Large Language Models (LLMs), ensuring efficient training and deployment across multiple GPUs.

Streamlining from training to deployment: We focused on creating a seamless transition from the training phase to deployment, ensuring that AI models are accurate and ready for real-world applications without unnecessary engineering bottlenecks.

Optimizing computing costs: We explored the advantages of a multi-cloud infrastructure, understanding how it can be leveraged to optimize computing costs and ensure businesses get the best value for their investment.

These insights, coupled with our observations and learnings from the program and our peers, have been pivotal in shaping VESSL AI's upcoming feature development, starting with a streamlined developer experience across hybrid cloud ML infrastructure.

- Scalability: Capable of handling up to 10,000 concurrent model training sessions.

- Reliability: 99.98% uptime, ensuring businesses face minimal disruptions.

- Cost-effectiveness: Potential savings of up to 70% on cloud computing costs.

We are proud to announce that, with technical support from the Google Cloud team, we were also able to add support for Google Cloud in a short time. Our managed cloud now partly relies on Google Cloud, and teams can bring their private Google Cloud account to VESSL AI with a single command, `vessl cluster create`.

The addition of Google Cloud to our list of partnerships, alongside Samsung, Oracle, and NVIDIA, further strengthens our vision and commitment to building the MLOps infrastructure for developing AI faster at scale. Beyond the accelerator program, we look forward to building a lasting partnership with Google Cloud and collaborating with its wide network of customers.

Kuss

Yong Hee

Growth Manager
