
11 January 2022

VESSL AI — Heading into 2022

Announcing our $4.4M seed round and sharing what’s next

How we got here

AI-driven competitive advantage is no longer the future. It’s now. However, companies still struggle to deliver the full business value of machine learning beyond experimentation. Models and datasets, and the infrastructure required to handle them, are more complex than ever before. Yet machine learning teams still work outside streamlined CI/CD pipelines, without sophisticated tooling or standardized engineering practices.

In fact, I have seen far too many cases where my peers at top research labs and prospective customers at industry-leading companies rely on Slack, Google Sheets, and scrappy scripts to manage ML workflows. Some attempt to develop internal platforms by integrating a myriad of open source tools, but most fail due to a shortage of talent and steep overhead. The result? State-of-the-art prototype models developed on a researcher’s laptop are rarely deployed to production at scale. Even when they do get deployed, simple but important questions remain unanswered under this outdated workflow:

- How did we achieve 0.963 accuracy? Why can’t we train the same model and get similar results?

- Which version of ImageNet did we use? Which images did we add to the dataset?

- Why can’t we access the 4th node of the GPU cluster? How much are we paying AWS every month?
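
The first two questions mostly come down to what was recorded at training time. As a rough illustration (plain Python, not the VESSL SDK; `dataset_fingerprint` and `run_record` are hypothetical names made up for this sketch), a run becomes much easier to explain and repeat once its hyperparameters, random seed, and a fingerprint of the dataset are stored next to the metric:

```python
import hashlib
import json


def dataset_fingerprint(files: dict[str, bytes]) -> str:
    """Return a SHA-256 digest that changes when a file is added, removed, or edited."""
    digest = hashlib.sha256()
    for name in sorted(files):  # sort so the result ignores insertion order
        digest.update(name.encode("utf-8"))
        digest.update(hashlib.sha256(files[name]).digest())
    return digest.hexdigest()


def run_record(params: dict, seed: int, files: dict[str, bytes], accuracy: float) -> str:
    """Serialize what is needed to explain, and later repeat, one training run."""
    record = {
        "params": params,
        "seed": seed,
        "dataset": dataset_fingerprint(files),
        "accuracy": accuracy,
    }
    return json.dumps(record, sort_keys=True)


# Hypothetical example data: two dataset versions that differ by one image.
v1 = {"cat.jpg": b"\x00\x01", "dog.jpg": b"\x02\x03"}
v2 = {**v1, "fox.jpg": b"\x04\x05"}

print(run_record({"lr": 0.01, "epochs": 10}, seed=42, files=v1, accuracy=0.963))
print(dataset_fingerprint(v1) == dataset_fingerprint(v2))  # the added image changes the fingerprint
```

With a record like this, "which version of the dataset did we use?" becomes a lookup rather than an archaeology project. A real experiment tracker would also capture code version and environment, which this sketch leaves out.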

VESSL AI in 2021

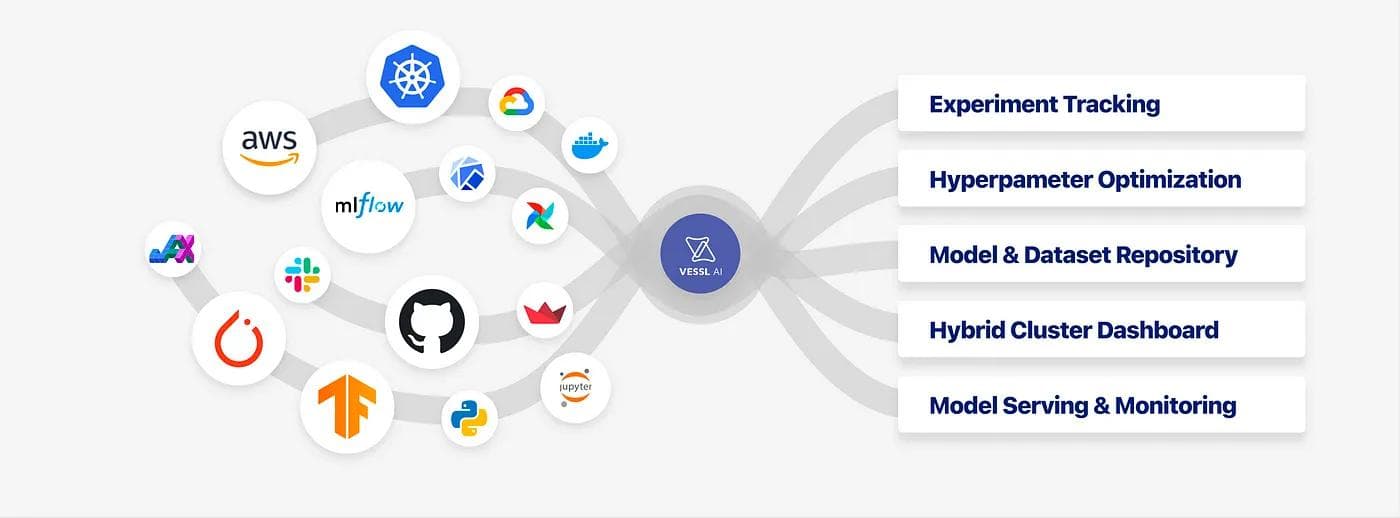

We started VESSL AI to help organizations tackle these challenges: to help them scale and accelerate their AI efforts by bringing modern workflows to machine learning. Under this mission, our team at VESSL AI has added a ton of features to the platform with three specific goals in mind:

1) Bring DevOps productivity to the entire ML lifecycle

- Experiment tracking: enhance visibility by logging all experiments on a central experiment tracking dashboard.

- Model registry: ensure reproducibility by storing all artifacts and pipeline history in a centralized repository.

2) Accelerate the path to production with automation

- Automated resource provisioning: maximize the use of computing resources by allocating resources dynamically across local and cloud clusters.

- Automated hyperparameter tuning: run hundreds of experiments in parallel and find the best hyperparameters.

3) Develop SOTA models at scale on a flexible infrastructure

- On-premise and hybrid deployments: save up to 80% on cloud training costs using hybrid deployment and spot instances.

- Distributed training: take full advantage of GPU machines using distributed multi-node training.

We developed these features with ML professionals in mind. They require minimal-to-zero changes to existing code and come with a powerful Python SDK and CLI. Further, teams get the added benefit of streamlining their workflows on a single, unified platform regardless of their development environment, moving seamlessly from local notebooks to GPU instances at scale.

During our closed beta, we have seen more than 500 machine learning engineers develop over 1,000 models on VESSL AI. We are also delighted to announce our $4.4M seed round co-led by KB Investment and Mirae Asset Venture Investment, with participation from A Ventures and Spring Camp. With this new round of financing, we look forward to growing our team to accelerate product development and expand our global footprint.

What’s Next

Heading into 2022, we have big plans to take MLOps further. With our public open beta planned for 2022 Q1, we are excited to meet and welcome more customers to VESSL AI. We also have plans to serve the greater machine learning community and the larger MLOps ecosystem:

- A public repository where ML enthusiasts can access the latest models and datasets and share reproducible experiments with the community.

- Powerful integrations with other tools in the MLOps ecosystem that let ML professionals easily assemble a complete ML platform, from DevOps and DataOps to ModelOps.

Join us

We are still at the very beginning of the AI transformation, and we would love to have more hands on deck. If you are passionate about machine learning and want to shape the next generation of machine learning tools, come join us! We are hiring for every position in Seoul and San Mateo. Check out our careers page for more details and feel free to reach out at people@vessl.ai.

Kuss
