
06 September 2024

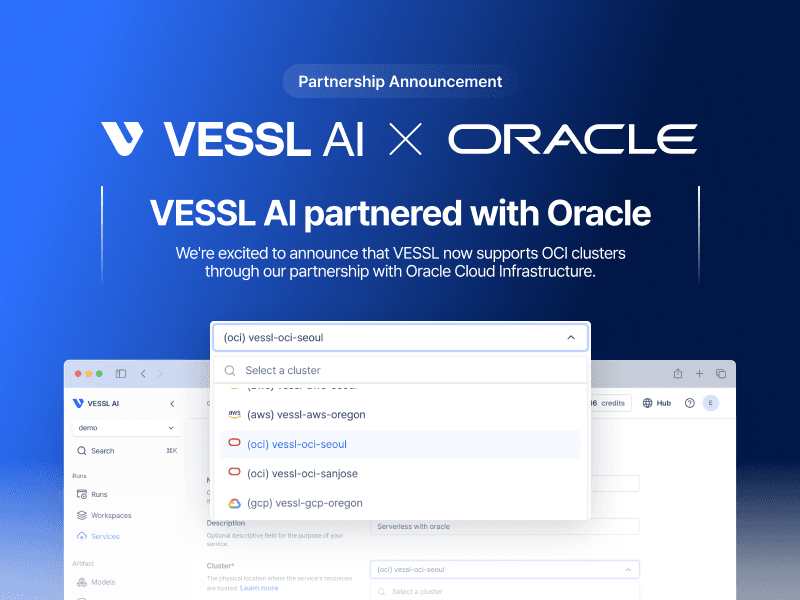

VESSL AI Partners with Oracle to Gear up the Next Era of MLOps

We are excited to announce that we have partnered with Oracle Cloud Infrastructure.

Key Takeaways

- VESSL AI has successfully integrated with Oracle Cloud Infrastructure (OCI).

- VESSL AI users get seamless provisioning and cost efficiency while meeting security and compliance requirements.

- Run inference and manage the ML lifecycle on VESSL now.

To Begin With

MLOps is a critical bridge that connects AI applications to a broad user base. As competition for compute resources intensifies among MLOps platforms, keeping users engaged by offering stable, reliable compute is becoming increasingly important. We believe that delivering sustainable, high-quality computing resources will play a pivotal role in the future of AI/ML, a transformation that may already be underway. It is no exaggeration to say that the future success of AI applications, fine-tuned models, data providers, and MLOps depends on how effectively these compute resources are delivered and maintained for users. Reliable computing resources are indispensable for three key reasons:

- Users demand low latency when executing, deploying, or serving their services.

- Users seek cost-effective solutions.

- Users require strong security.

In this rapidly evolving landscape, the ability to consistently meet these demands will determine which platforms lead the industry.

VESSL AI Meets Oracle

In brief, VESSL AI is building an MLOps/LLMOps platform: a control plane for ML computing. Simply put, VESSL AI empowers users to manage their ML lifecycle. To meet the user demands described above, we chose Oracle Cloud Infrastructure (OCI) because it satisfies the following requirements:

Three key strengths of Oracle Cloud Infrastructure

- High Performance and Scalability: OCI is designed for high-performance computing backed by bare metal architecture and non-blocking dedicated networks. OCI provides scalable infrastructure to handle the most demanding workloads, including AI training, low-latency inference at scale, and data-intensive applications.

- Robust Security and Compliance: OCI offers comprehensive security features and compliance certifications, ensuring that data and applications are protected according to the highest industry standards.

- Cost-Effective Pricing: OCI provides a flexible pricing model with predictable costs, making it a cost-effective option for businesses of all sizes, especially for running complex enterprise applications.

Because we run a multi-cloud MLOps platform, reliable compute resources are crucial. By using OCI's resources, we can reach international users without boundaries, and those users can reach us in return. Just as OCI helps VESSL AI, we can support OCI by leveraging our position in the MLOps market. From a user-centric perspective, VESSL AI can function as a sidecar for OCI users, helping them get more out of their infrastructure. OCI benefits from this partnership by attracting AI-driven workloads, increasing the value of its ecosystem, and expanding its customer base, particularly in MLOps, which strengthens its position in the cloud-based AI/ML market.

What Values Can VESSL AI Offer?

We streamline the process of building AI applications and support builders at every stage, whether they are planning to build, currently developing, or have already developed on our platform. Our platform empowers ML engineers to create testable developments, reproducible deployments, and automated operations with minimal effort. To achieve this, we offer a suite of products, including VESSL Run (for training jobs, inference with auto-scaling services, and launching experiments on Jupyter Notebook), Service (for deploying services with APIs), and Pipeline (for managing services with workflow automation).

To sum up, our key advantages are the following:

- Multi-cloud support: VESSL supports multiple cloud environments, including Google Cloud Platform (GKE), Amazon Web Services (EKS), and on-premise environments.

- Scalability: Our platform enables users to scale across hundreds of instances, whether for batch jobs, inference tasks, or other processes on the cloud. With VESSL Run and Workspace, users can launch numerous jobs simultaneously across multiple cloud environments and Jupyter Notebooks. This lets users handle large-scale machine learning workloads efficiently and flexibly as their projects grow.

- System Efficiency: We mitigate cold-start issues by minimizing the time it takes to launch services. With VESSL Serverless Mode, cold-start times are significantly reduced (minimum 17 seconds, average 5 minutes), enabling users to enjoy low-latency inference and deployment.

By leveraging the most reliable cloud service providers, users can accelerate their services on VESSL. In particular, by adding OCI as a cloud service provider on VESSL, we unlock the following enhancements:

- Distribution: We strengthen our cloud support by offering a wider variety of computing resources, giving users more options to optimize their workloads based on their specific needs.

- Scalability: OCI allows us to scale reliably, enabling users to provision resources at scale without compromising performance or availability. Even the most demanding AI/ML workloads can be managed effectively, no matter how large or complex they become.

- Cost Efficiency: By integrating OCI, we provide cost-effective resources that allow users to optimize their cloud spending while still accessing the high-performance computing power that AI/ML applications need. Users can scale their operations without incurring excessive costs, making VESSL an economical choice for businesses of all sizes.

VESSL AI Embraces OCI

The integration with OCI is complete. When you visit app.vessl.ai, the clusters section now lists vessl-oci-seoul and vessl-oci-sanjose.

Similarly, in VESSL Run, Workspace, and Service, users can view OCI clusters and apply them to their services.

If you are an AI/ML service provider or engineer, you can use Run, Workspace, Service, and Pipeline on our platform without any hassle.

What’s Next?

Since our founding in 2020, VESSL AI has been dedicated to developing a platform that manages the entire machine learning lifecycle, enabling users to create services, run experiments, manage batch jobs, and handle operations seamlessly. We are honored and proud to partner with Oracle Cloud Infrastructure. As a trusted partner, we will continue to accelerate the AI/ML industry by providing robust computing resources and an advanced MLOps platform for building AI applications. We will also focus on attracting users who seek cloud solutions and MLOps platforms for their services, expanding our reach across both platforms.

Wayne Kim

Technical Communicator
