29 November 2023

Unveiling VESSL Run: Bringing Unified Interfaces and Reproducibility to Machine Learning

Discover VESSL Run, a versatile ML tool that streamlines training and deployment across multiple infrastructures and uses YAML for easy reproducibility and integration.

Machine learning (ML) is an ever-evolving landscape, with new models and techniques emerging on a daily basis. While this progress is exciting, it poses a challenge: How do you know if a specific ML model is suitable for your business needs? The ideal scenario would be to instantly reproduce these models, fine-tune them using your data, and build a demo with identical resource configurations to test their efficacy.

Complicating matters further is the ongoing GPU shortage. For those considering an on-premise cluster, the upfront cost, management expense, and limited GPU capabilities are deterrents. Using multiple cloud providers is an option, but learning the intricacies of each platform is time-consuming.

That’s where VESSL Run comes into play. It offers a unified interface across different infrastructures, whether on-premise or cloud, that makes it easier to train and deploy ML models. Let’s look at how VESSL Run solves these problems.

Unified Interface Across Clusters

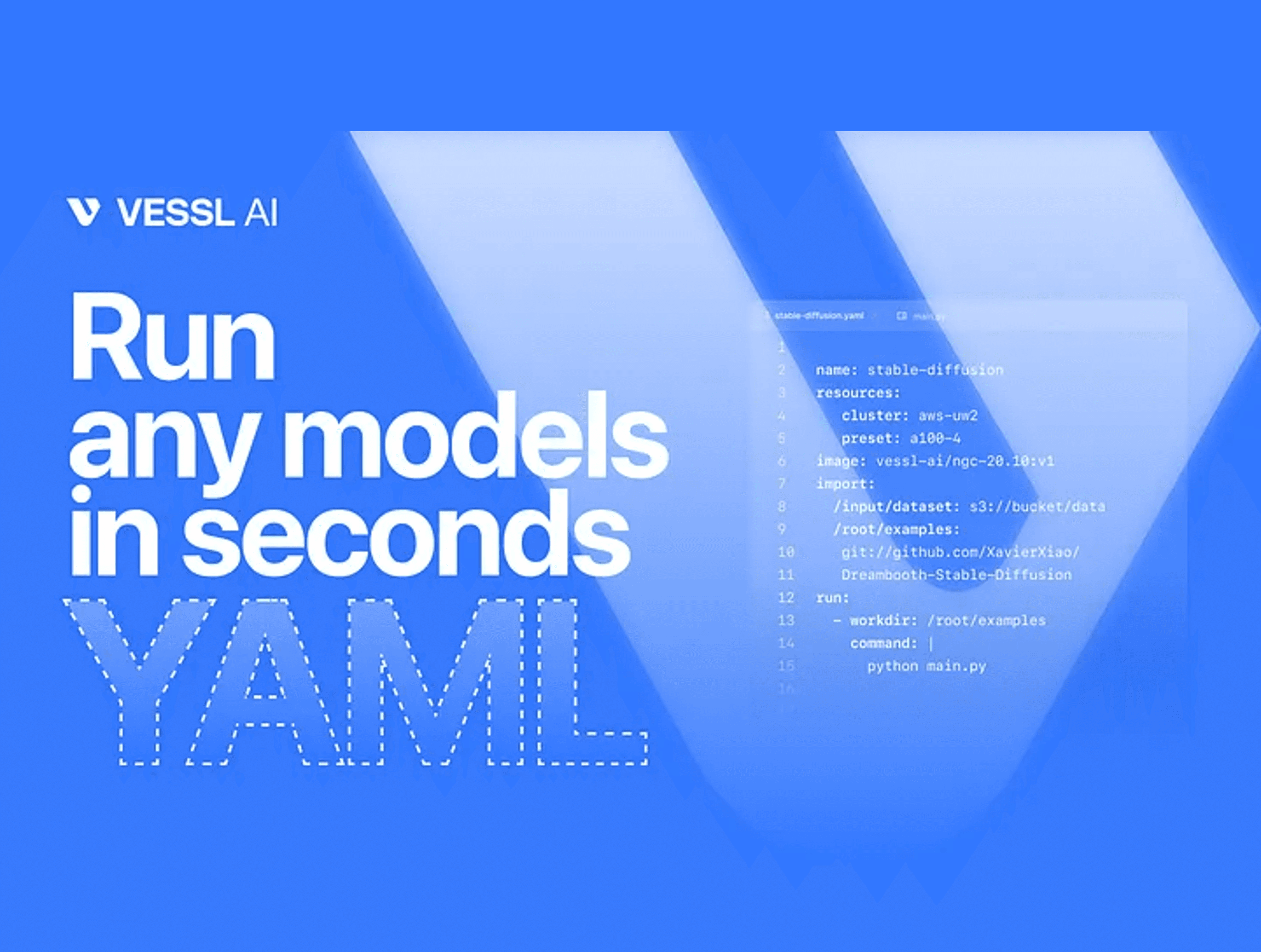

Whether you’re using AWS, Azure, GCP, or OCI, VESSL Run lets you work with all of these cloud providers through the same interface. Many organizations also maintain on-premise clusters for data security or compliance reasons. The key to juggling these different infrastructures is a lightweight yet powerful definition language: YAML.

YAML Definition: Bringing Order to Chaos

YAML offers several advantages that make it well suited to ML workflows:

- Portability & Version Control: YAML files can be easily version-controlled, fostering collaboration among team members.

- Bridging the Reproducibility Gap: YAML configurations make it straightforward to replicate an exact model setup, reducing uncertainty about how a result was produced.

- Avoiding Guesswork: Detailed YAML configurations leave little room for ambiguity, making it easier to see what went wrong or what needs optimization.

```yaml
name: llama2_c_training
description: Inference Llama 2 in one file of pure C.
resources:
  cluster: aws-apne2
  preset: v1.cpu-4.mem-13
image: quay.io/vessl-ai/ngc-pytorch-kernel:23.07-py3-202308010607
run:
  - workdir: /root/examples/llama2_c/
    command: |
      wget https://karpathy.ai/llama2c/model.bin
      gcc -O3 -o run run.c -lm
      ./run model.bin
import:
  /root/examples/: git://github.com/vessl-ai/examples
```

This YAML file is a minimal example of declarative ML. It defines a job that runs inference on Llama 2 with llama2.c, Andrej Karpathy’s implementation in a single file of pure C (karpathy/llama2.c). The `resources` block selects the cluster and resource preset, `image` sets the container image, `import` pulls the VESSL examples repository into `/root/examples/`, and `run` downloads a model checkpoint, compiles `run.c`, and runs inference from the given working directory. Because the whole job lives in one file, it can be executed on the VESSL platform and shared and versioned like any other code. For an in-depth explanation of every available YAML key-value pair, consult the complete YAML reference in VESSL’s documentation.
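To make the mapping from YAML keys to actual work concrete, here is a hypothetical local equivalent of the `import` and `run` sections. It is a sketch under the assumption that VESSL clones the imported Git repository into the mapped path and then executes `command` inside `workdir`; it is not how VESSL runs jobs internally. The `step` helper and the `DRY_RUN` variable are hypothetical names introduced only for this illustration: by default the script just prints each step, and setting `DRY_RUN=0` executes the steps on a machine with git, wget, gcc, and write access to `/root`.

```shell
#!/usr/bin/env bash
# Hypothetical local equivalent of the llama2_c_training run (illustration only).
# Assumption: VESSL clones the imported repository into the mapped path,
# then runs `command` inside `workdir`.
set -euo pipefail

# Hypothetical helper: print each step when DRY_RUN=1 (the default),
# execute it when DRY_RUN=0. Runs in the current shell, so `cd` sticks.
step() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# import: /root/examples/ <- git://github.com/vessl-ai/examples (cloned over HTTPS here)
step git clone https://github.com/vessl-ai/examples /root/examples/

# run[0].workdir
step cd /root/examples/llama2_c/

# run[0].command, one line at a time
step wget https://karpathy.ai/llama2c/model.bin
step gcc -O3 -o run run.c -lm
step ./run model.bin
```

Printing by default keeps the sketch safe to read and run anywhere; the YAML version removes the need for this script entirely, since the platform provisions the machine and performs these steps for you.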

Explore the following real-world scenarios to understand VESSL Run:

- Train a Thin-Plate Spline Motion Model with GPU resources

- Run a Stable Diffusion demo with GPU resources

Highly Customizable Integration Options

VESSL’s extensive integrations include:

- Internal VESSL Features: VESSL Dataset, Model Registry, and VESSL Artifact.

- Code Repositories: GitHub, GitLab, and Bitbucket are directly integrated, allowing for seamless code deployment.

- Container Images: Private images on Docker Hub and AWS ECR are supported, not just public ones.

- Volume Operations: Whether you’re importing data from the web, mounting filesystems, or exporting results, VESSL makes it easy.

- Interactive Run: Use JupyterLab, SSH, or even Visual Studio Code to interact with your models.

Best of all, every one of these integrations can be declared in YAML definitions, which keeps runs both easy to set up and consistent.

We prepared a few use cases to help you grasp the concept of volume operations:

VESSL Hub: A Repository of Cutting-Edge Models

VESSL Hub hosts a curated collection of YAML files tailored for state-of-the-art open-source models such as llama2.c, nanoGPT, Stable Diffusion, MobileNeRF, LangChain, and Segment Anything. Getting started is as simple as copying and pasting these YAML files. Install the VESSL CLI from PyPI and run the YAML file you want:

```shell
pip install vessl
vessl run create -f config.yaml
```
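Putting the pieces together, the sketch below saves the llama2.c run definition shown earlier in this post as `config.yaml` and submits it with `vessl run create -f config.yaml`. It assumes the VESSL CLI is already installed and logged in to your organization; if the `vessl` command is not on your `PATH`, the script only writes the file and prints a hint.

```shell
#!/usr/bin/env bash
# Sketch: save the llama2.c run definition from this post as config.yaml
# and submit it. Assumes the VESSL CLI is installed and logged in.

# The quoted 'EOF' delimiter stops the shell from expanding anything inside the YAML.
cat > config.yaml <<'EOF'
name: llama2_c_training
description: Inference Llama 2 in one file of pure C.
resources:
  cluster: aws-apne2
  preset: v1.cpu-4.mem-13
image: quay.io/vessl-ai/ngc-pytorch-kernel:23.07-py3-202308010607
run:
  - workdir: /root/examples/llama2_c/
    command: |
      wget https://karpathy.ai/llama2c/model.bin
      gcc -O3 -o run run.c -lm
      ./run model.bin
import:
  /root/examples/: git://github.com/vessl-ai/examples
EOF

# Submit only if the CLI is available; otherwise just leave the file in place.
if command -v vessl >/dev/null 2>&1; then
  vessl run create -f config.yaml
else
  echo "vessl CLI not found; install it with: pip install vessl" >&2
fi
```

The same pattern works for any YAML file copied from VESSL Hub: paste it into `config.yaml`, keep it under version control, and submit it with one command.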

VESSL Run as an Atomic Unit

VESSL Run isn’t just about integration and reproducibility. It also offers features that cover the entire ML pipeline:

- Hyperparameter Optimization

- Distributed Training

- VESSL Serve

- VESSL Pipeline

And that’s just scratching the surface. With VESSL Run, you get a one-stop solution for managing your ML models across various infrastructures.

Intae Ryoo

Product Manager
