
23 August 2024

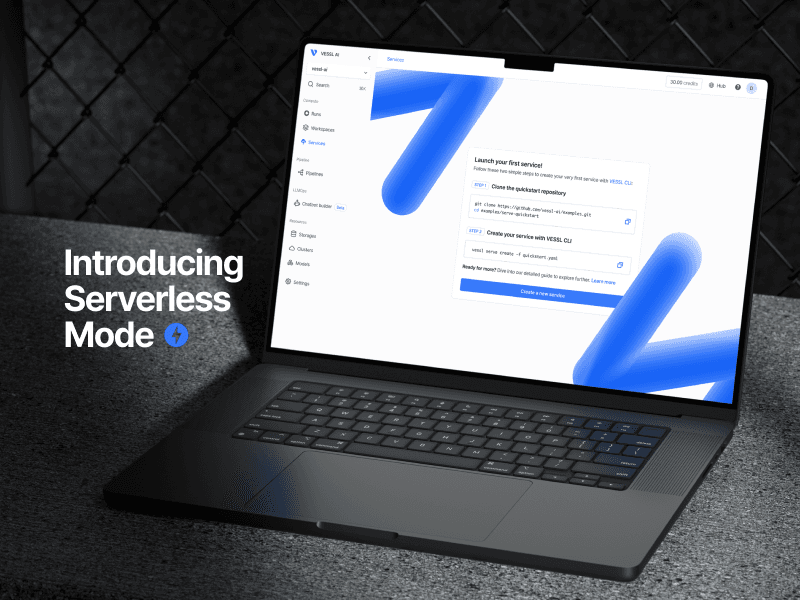

Introducing Serverless Mode — Zapping Ahead Fast Inference

Introducing “Serverless Mode,” a new feature on VESSL AI

TL;DR

- We’re excited to introduce “Serverless Mode,” a new feature designed to help teams manage infrastructure effortlessly.

- Serverless Mode enhances scalability, simplifies deployment, minimizes cold starts (about 17 seconds on average, or about 5 minutes when a new image must be pulled), and improves cost efficiency by scaling to zero when not in use.

- Try Llama 3.1 with Serverless Mode today↗, and check out the demo video on our YouTube↗.

Background

VESSL AI has long given clients fine-grained control over their inference processes through our Provisioned Mode↗. While this mode serves many users well, we recognized a growing demand for a more streamlined solution for occasional, straightforward inference tasks. In response, we've developed our new Serverless Mode↗.

This innovation offers a hassle-free alternative for direct inference, simplifying the process for users who don't require the full customization of Provisioned Mode.

Meet Serverless Mode

On VESSL AI, Serverless Mode allows for the seamless and effortless deployment of your machine learning models. With this feature, you can perform model inference without the need to manage any infrastructure.

The biggest pain point

The primary issue with VESSL's current Provisioned Mode, as with similar MLOps platforms, is cost: users want to pay only for the resources they actually use, but the existing setup keeps servers running even when they are idle. Ideally, the system should scale to zero when not in use, allocating resources only when requests come in and then returning to a resource-free state afterward.

While the Provisioned Mode on VESSL offers a customizable environment necessary for many inference tasks, it can be inflexible, especially in operational situations with fluctuating inference needs.

Solution

To address these challenges, VESSL AI has enhanced the serverless deployment experience by focusing on the following:

- Implementing the scale-to-zero feature

- Maximizing usability while ensuring cost efficiency

- Streamlining the scaling process by avoiding unnecessary features

- Reducing the need for backend resources

- Minimizing cold start times

Here are the key benefits of Serverless Mode:

Key Features

1. Cheap — Cost efficiency with scale-to-zero

Serverless Mode maximizes cost efficiency by allocating resources and environments only when they are needed. Under a pay-as-you-go pricing model, you pay only for the resources you use, so costs scale with your budget. The system scales to zero when idle and activates only when a request arrives, so you are not paying for a server that sits unused.
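To make the scale-to-zero savings concrete, here is a rough back-of-the-envelope comparison. The hourly rate, the traffic pattern, and the function names are hypothetical placeholders, not VESSL pricing. The sketch also assumes each burst of traffic is billed for the 10-minute idle window that passes before the worker scales back to zero, which this post does not state.

```python
# Back-of-the-envelope cost comparison. All numbers are hypothetical
# placeholders, not VESSL pricing.
HOURLY_GPU_RATE = 2.00      # assumed price per GPU-hour
IDLE_TIMEOUT_H = 10 / 60    # worker scales to zero after 10 idle minutes


def always_on_cost(hours_in_period: float, rate: float = HOURLY_GPU_RATE) -> float:
    """An always-on server is billed for every hour, busy or idle."""
    return hours_in_period * rate


def scale_to_zero_cost(burst_hours: list[float], rate: float = HOURLY_GPU_RATE,
                       idle_timeout_h: float = IDLE_TIMEOUT_H) -> float:
    """Assumes each traffic burst is billed for its active time plus the idle
    window that elapses before the worker scales back to zero."""
    return sum(active + idle_timeout_h for active in burst_hours) * rate


if __name__ == "__main__":
    month_hours = 30 * 24      # 720 hours in a 30-day month
    bursts = [0.5] * 20        # hypothetical: 20 half-hour bursts per month
    print(f"always-on:     ${always_on_cost(month_hours):,.2f}")
    print(f"scale-to-zero: ${scale_to_zero_cost(bursts):,.2f}")
```

With these made-up inputs, the script prints $1,440.00 for the always-on server versus $26.67 for scale-to-zero. The gap shrinks as traffic becomes more continuous, which is why Provisioned Mode remains a fit for steady workloads.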

2. Easy — Automatic scaling

Your models scale in real time with workload demand, without any extra configuration to tune the scaling process. VESSL AI automatically manages GPU allocation and reduces idle time using VESSL-managed clusters, which offer a variety of GPU resources, together with our resource management mechanisms.
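This post does not spell out VESSL AI's scaling policy. As a generic illustration only, the sketch below shows target tracking, a common autoscaling rule similar in spirit to the Kubernetes Horizontal Pod Autoscaler: run just enough replicas that each handles at most a target number of concurrent requests, which drops to zero replicas when there is no traffic. The function and its parameters are hypothetical.

```python
import math


def desired_replicas(in_flight: int, target_per_replica: int,
                     max_replicas: int) -> int:
    """Generic target-tracking rule (hypothetical, not VESSL's policy).

    Returns enough replicas that each serves at most `target_per_replica`
    concurrent requests, capped at `max_replicas`. With no traffic the
    result is 0, i.e. scale to zero.
    """
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    if in_flight < 0:
        raise ValueError("in_flight cannot be negative")
    needed = math.ceil(in_flight / target_per_replica)
    return min(needed, max_replicas)


if __name__ == "__main__":
    for load in (0, 1, 4, 9, 100):
        replicas = desired_replicas(load, target_per_replica=4, max_replicas=8)
        print(f"{load} in-flight -> {replicas} replicas")
```

A production system would usually not drop to zero the instant traffic stops; it would wait out an idle window first, like the 10-minute auto-terminate window described in the walkthrough.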

3. Quick — Simplified deployment

Minimal configuration is required, making deployment accessible to users regardless of their technical background. Users can enjoy hassle-free deployment without dealing with complex configurations.

4. Reliable and adaptable — High availability and resilience

Our built-in mechanisms keep your models operational and resilient to failures. On average, it takes just 17 seconds from the moment a user presses the start button until the workload is up and running. When a different container image must be pulled, startup averages about 5 minutes, so deployments stay quick. Combined with our robust backend infrastructure, this effectively mitigates cold starts and keeps your services highly available.
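Because a request that arrives while the service is scaled to zero has to wait for a worker to start, client code should tolerate that delay rather than fail on the first attempt. Below is a generic retry helper with exponential backoff. Its name and defaults are hypothetical, and the 6-minute budget is only an assumption chosen to cover the roughly 5-minute case when a new image must be pulled. It also assumes the service answers with a non-success status (for example, 503) while the worker is still starting, which this post does not confirm.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def call_with_cold_start_retry(
    send: Callable[[], T],
    is_ready: Callable[[T], bool],
    max_wait_s: float = 360.0,      # assumed budget: covers a ~5-minute image pull
    initial_delay_s: float = 2.0,
    max_delay_s: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `send` until `is_ready` accepts its result (hypothetical helper).

    Waits with exponential backoff between attempts (2s, 4s, 8s, ... capped
    at `max_delay_s`) and raises TimeoutError once the next wait would push
    the total past `max_wait_s`.
    """
    waited = 0.0
    delay = initial_delay_s
    while True:
        result = send()
        if is_ready(result):
            return result
        if waited + delay > max_wait_s:
            raise TimeoutError(f"service not ready after waiting {waited:.0f}s")
        sleep(delay)
        waited += delay
        delay = min(delay * 2, max_delay_s)
```

In practice, send could wrap the requests.post call shown in the walkthrough (with its own timeout), and is_ready could check that the response status code is 200. If the client sees connection errors rather than an error status during startup, send would also need to catch those.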

The best part is that you can enjoy the benefits of cost efficiency with scale-to-zero, simplified deployment, and high availability, all through a single feature: our Serverless Mode.

Walkthrough

This section walks through how to use Serverless Mode. You can also watch the demo video on our YouTube↗ channel.

Try Serverless Mode here↗.

Creating a service

First, visit app.vessl.ai↗ and navigate to the “Service” section, where Serverless Mode is available. Then, create a new service using a VESSL-managed cluster and enable the Serverless toggle.

Making a revision

After creating your serverless service, you can create revisions to deploy it. Only a few simple settings are needed, which keeps deployment fast:

- Resource: Select the GPU resources. (only VESSL-managed clusters are available)

- Container image: Choose either a VESSL-managed container image or a custom image from an external source.

- Task

  - Commands: Provide the bash command that starts the service. For example:

    ```bash
    text-generation-launcher \
      --model-id $MODEL_ID \
      --port 8000 \
      --max-total-tokens 8192
    ```

  - Port: Open the HTTP port the container listens on (8000 in the example above).

- Advanced Options: Add the MODEL_ID value that the command reads from $MODEL_ID.

With this simplified YAML configuration, you can create a service that scales up from zero while keeping cold-start delays short.
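Two of these settings have to agree with the command: the opened port must match the --port flag, and MODEL_ID, which the command reads as $MODEL_ID, must be given a value under Advanced Options. The RevisionSketch class below is hypothetical and is not a VESSL API object; it only mirrors the form fields listed above so that those two consistency checks can be shown in code.

```python
import shlex
from dataclasses import dataclass, field


@dataclass
class RevisionSketch:
    """Hypothetical mirror of the revision form fields; not a VESSL API object."""
    resource: str
    image: str
    command: str
    port: int
    env: dict[str, str] = field(default_factory=dict)  # Advanced Options values

    def problems(self) -> list[str]:
        """Return consistency problems between the fields (empty if none)."""
        issues = []
        # Join bash line continuations (backslash + newline) before tokenizing.
        args = shlex.split(self.command.replace("\\\n", " "))
        if "--port" in args:
            i = args.index("--port")
            if i + 1 >= len(args) or args[i + 1] != str(self.port):
                issues.append(f"--port in the command does not match opened port {self.port}")
        if "$MODEL_ID" in args and "MODEL_ID" not in self.env:
            issues.append("the command uses $MODEL_ID but no MODEL_ID value is set")
        return issues


if __name__ == "__main__":
    command = (
        "text-generation-launcher \\\n"
        "  --model-id $MODEL_ID \\\n"
        "  --port 8000 \\\n"
        "  --max-total-tokens 8192"
    )
    revision = RevisionSketch(
        resource="{gpu-resource}",
        image="{container-image}",
        command=command,
        port=8000,
        env={"MODEL_ID": "{model-id}"},
    )
    print(revision.problems() or "no problems found")
```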

HTTP requests

Once you’ve made a revision, you can send an inference request to the service you just created.

The following example sends a request to the service from a Python script.

- To find your endpoint information, click the “Request” button.

- The Python script example is as follows:

```python
import requests

base_url = "{your-service-endpoint}"  # endpoint shown under the "Request" button
token = "{your-token}"  # token used to authorize requests to the service

# Send a text-generation request to the serverless service.
response = requests.post(
    f"{base_url}/request/generate",
    headers={"Authorization": f"Bearer {token}"},
    json={
        "inputs": "What is Deep Learning?",
        "parameters": {"max_new_tokens": 1000},
    },
)

print(f"response: {response}")
print(f"response json: {response.json()}")
```

Scaling from Zero to One and Scaling Out with Auto-Terminate

After you send a request, a worker is activated, scaling the service from zero to one. While designing Serverless Mode, VESSL AI’s Compute Squad team focused on helping customers reduce costs, so the team implemented an auto-terminate feature: if no activity (no GPU usage) is detected for 10 minutes, VESSL AI automatically scales the worker back down to zero. This way, an idle worker does not keep running if you forget to turn it off.
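The scale-from-zero and auto-terminate behavior can be pictured as a small state machine. The sketch below is a hypothetical model, not VESSL's implementation: it counts each incoming request as activity, whereas the post describes the real signal in terms of GPU usage, and it uses a 10-minute idle window to match the auto-terminate setting described above.

```python
from dataclasses import dataclass
from typing import Optional

IDLE_TIMEOUT_S = 10 * 60  # 10 minutes, matching the auto-terminate window above


@dataclass
class WorkerLifecycleSketch:
    """Hypothetical scale-to-zero model; treats each request as activity."""
    idle_timeout_s: float = IDLE_TIMEOUT_S
    replicas: int = 0
    last_activity: Optional[float] = None

    def on_request(self, now: float) -> None:
        # A request arriving while scaled to zero starts one worker (0 -> 1).
        if self.replicas == 0:
            self.replicas = 1
        self.last_activity = now

    def tick(self, now: float) -> None:
        # Called periodically: scale back to zero once the worker has been
        # idle for the full timeout (auto-terminate).
        if self.replicas > 0 and self.last_activity is not None:
            if now - self.last_activity >= self.idle_timeout_s:
                self.replicas = 0
                self.last_activity = None


if __name__ == "__main__":
    w = WorkerLifecycleSketch()
    w.on_request(now=0)    # first request: 0 -> 1
    w.tick(now=599)        # 599 s idle: still running
    print(w.replicas)
    w.tick(now=600)        # 600 s idle: scaled back to zero
    print(w.replicas)
```

Each new request resets the idle clock, so a worker that keeps receiving traffic stays up and only an idle one is terminated.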

Conclusion

VESSL AI developed Serverless Mode in response to customer feedback, with the goal of making serverless deployment easy to set up on the VESSL AI platform through a unified YAML configuration. Because terminating workers manually every time wastes both effort and resources, we introduced and refined the scale-to-zero feature. VESSL AI is always a step ahead, moving in sync with our customers’ needs. If you are interested in trying Serverless Mode, please visit app.vessl.ai↗.

Wayne Kim

Technical Communicator

Lidia

Product Manager
