
03 January 2023

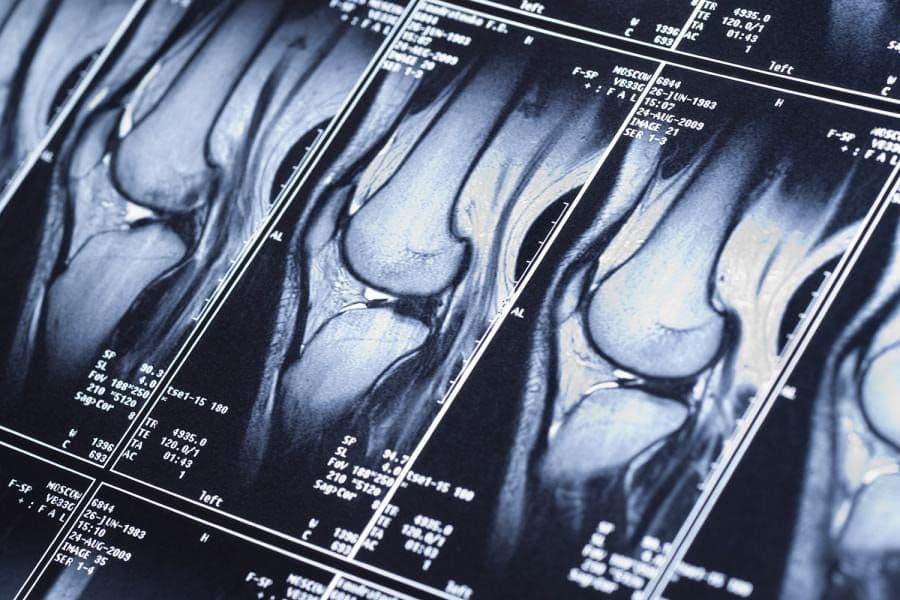

Seoul National University accelerates ML for MRI research with an open competition using VESSL AI

With VESSL Run, participants in the SNU fastMRI Challenge can focus on building state-of-the-art MRI reconstruction models

In 2019, Facebook AI Research (FAIR) and NYU Langone Health hosted the inaugural fastMRI Challenge to make MRI scans up to 10X faster with AI. As part of the competition, NYU Langone Health released fully anonymized raw data and image datasets of 17K+ MRIs to the broader machine learning community to accelerate the clinical adoption of ML in MRI research.

The annual campus-wide fastMRI Challenge at Seoul National University (SNU) builds on this initiative. It is hosted by the College of Engineering in conjunction with AIRS Medical, a medical AI startup founded by the winners of the 2020 FAIR-NYU fastMRI Challenge. Every year, the competition brings together over 150 teams from 30+ disciplines to explore the latest trends in ML-accelerated MRI and build state-of-the-art reconstruction models with real-world clinical data and powerful GPU resources dedicated to the challenge.

However, setting up a development environment for a machine learning research competition is a complex engineering task. SNU had to give 150+ teams easy access to campus-wide HPC clusters and large image datasets, in addition to a Kaggle-like notebook environment.

The College of Engineering at Seoul National University worked with VESSL to get the research environment they needed. The organizers were able to simply get the event going instead of spending weeks setting up the infrastructure. With sufficient computing power at any time during the competition and instant access to large datasets, the participants could pour their full attention into advancing their MRI reconstruction models.

The Problem

Seoul National University needed a research environment that provides easy access to high-performance computing power and large MRI datasets

MRI reconstruction is both a GPU- and data-intensive task. The winning model of the 2020 fastMRI Challenge by AIRS Medical, for example, had 200M+ parameters trained on 4 NVIDIA V100s for 7 days. SNU wanted to scale that 150X — an environment in which 150+ teams could each train and optimize their own models.
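To put that scale in rough numbers, the short Python sketch below converts the figures quoted above into GPU-hours. The 150-team multiplier assumes every team repeats one run of the same size, which is an illustrative upper bound rather than a figure from the organizers, and it ignores the performance gap between V100 and RTX 3080 GPUs.

```python
# Back-of-the-envelope GPU-hour arithmetic from the figures quoted above.
GPUS_PER_RUN = 4   # NVIDIA V100s used for the 2020 winning model
DAYS_PER_RUN = 7   # training duration in days
TEAMS = 150        # approximate number of teams at SNU


def gpu_hours(gpus: int, days: int) -> int:
    """GPU-hours consumed by one training run."""
    return gpus * days * 24


single_run = gpu_hours(GPUS_PER_RUN, DAYS_PER_RUN)

# Assumption (not from the source): every team repeats one run of this size.
all_teams = single_run * TEAMS

print(f"One run: {single_run} GPU-hours")
print(f"{TEAMS} teams: {all_teams} GPU-hours")
```

Even under this simplified assumption, the total runs to roughly a hundred thousand GPU-hours, which is why fair scheduling mattered more than raw capacity.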

The College of Engineering wanted to (1) allocate a limited pool of 200+ mid-tier RTX 3080 GPUs fairly and efficiently, (2) set up object storage for a >100GB MRI dataset with zero import time, and (3) store all training metadata and lineage to ensure reproducibility.

Before SNU adopted VESSL Run, competitors were each assigned to a specific node of the GPU cluster, which significantly limited their ability to explore newer models, larger datasets, and more iterations. With only a bare-metal storage system, they also had to download the 100GB dataset every time they launched a notebook server or training job. Most critically, organizers could not properly evaluate the final models because some of them could not be reproduced at the expected accuracy.

The Solution

VESSL Run provides the researchers with the ML infrastructure, tools, and workflow they need to advance their models

Using VESSL, SNU set up a highly scalable infrastructure for the fastMRI Challenge in just a few hours. The work began with configuring GPU clusters and storage systems at the campus-wide data center.

Teams were assigned a limited number of GPU hours instead of nodes, which encouraged them to use GPUs more wisely, for example by running containerized jobs instead of persistent notebook servers. This let SNU free up idle nodes for more resource-intensive training and optimization tasks. With VESSL’s native support for Kubernetes hostPath volumes, teams no longer had to download the >100GB dataset every time they ran a notebook server or training job. SNU also used the experiment dashboard as a leaderboard that records each model’s performance metrics and metadata, making all model submissions fully reproducible.
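For readers unfamiliar with hostPath volumes, the sketch below builds a plain Kubernetes Pod manifest in Python that mounts a dataset directory already present on the node, so the container reads it in place rather than downloading it. This is a generic Kubernetes illustration, not VESSL's configuration format; the function name, pod name, image, and paths are all hypothetical.

```python
import json


def dataset_pod(name: str, image: str, host_dir: str, mount_dir: str) -> dict:
    """Build a Pod manifest that mounts a node-local dataset read-only."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [{
                "name": "train",
                "image": image,
                # Request one GPU through the NVIDIA device plugin resource.
                "resources": {"limits": {"nvidia.com/gpu": 1}},
                "volumeMounts": [{
                    "name": "dataset",
                    "mountPath": mount_dir,
                    "readOnly": True,
                }],
            }],
            "volumes": [{
                "name": "dataset",
                # hostPath exposes a directory that already exists on the node.
                "hostPath": {"path": host_dir, "type": "Directory"},
            }],
        },
    }


# Hypothetical names and paths, for illustration only.
pod = dataset_pod("fastmri-train", "example.com/fastmri:latest",
                  "/data/fastmri", "/input")
print(json.dumps(pod, indent=2))
```

Because the data stays on the node, a job starts as soon as the container does. The trade-off is that the dataset must be present on every node where such a pod may be scheduled.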

With the right infrastructure and tools, competitors naturally adopted more efficient and scalable workflows that maximized model performance:

Train baseline models on fractional GPUs enabled by VESSL’s support for Multi-Instance GPU (MIG).

Scale their models on HPCs using VESSL’s job scheduler and optimize them with hyperparameter optimization and distributed training.

Record the hyperparameters, runtime environment, and versioned datasets automatically with VESSL Run.
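To make the last item concrete, here is a minimal sketch of the kind of record that supports reproducibility: hyperparameters, runtime details, and a content hash that pins the dataset version. It uses only the Python standard library and is not VESSL's API; the function names, field names, and sample values are hypothetical.

```python
import hashlib
import json
import platform
import sys


def dataset_fingerprint(data: bytes) -> str:
    """Return a SHA-256 hex digest identifying this exact dataset content."""
    return hashlib.sha256(data).hexdigest()


def run_record(hyperparams: dict, dataset_bytes: bytes) -> dict:
    """Collect what is needed to rerun an experiment under the same conditions."""
    return {
        "hyperparameters": hyperparams,
        "runtime": {
            "python": sys.version.split()[0],
            "platform": platform.platform(),
        },
        "dataset_sha256": dataset_fingerprint(dataset_bytes),
    }


# Placeholder values; a real run would hash the actual dataset files.
record = run_record({"lr": 1e-3, "epochs": 10}, b"placeholder dataset contents")
print(json.dumps(record, indent=2, sort_keys=True))
```

Storing such a record next to each leaderboard entry lets an organizer check that a resubmitted model was trained on the same data with the same settings.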

Instant access to high-performance computing and large datasets saved the researchers hours of wait time and allowed them to make fuller use of the HPCs than they ever could with bare metal. The streamlined workflow made it easier for them to train high-performing ML models while also freeing up researcher time.

What’s Next

With the campus-wide adoption of VESSL, the College of Engineering at SNU achieves ML research outcomes faster

Following the success of the fastMRI Challenge, the College of Engineering at SNU now uses VESSL for graduate research and undergraduate AI/ML courses. Students use VESSL to access the school’s GPU clusters in seconds and get multi-gigabyte datasets with zero wait time.

Easy access to the school’s ML infrastructure, together with VESSL’s guided workflows, has lowered the barrier to experimenting with SOTA models, helping even students without CS backgrounds rapidly try out AI-enhanced research and applications. This makes it possible for researchers to explore interdisciplinary possibilities that simply weren’t feasible before, such as the use of AI in MRI scans.

For those who are already pursuing the latest ML research, VESSL has made it easier to produce high-performing ML models. With VESSL, researchers save the hours previously required to set up a research environment, run manual training and optimization tasks, and ensure reproducibility. By using VESSL Run, researchers across departments at Seoul National University now spend more of their time advancing their ML research.

“As AI becomes more integral to engineering disciplines, helping our students and researchers easily access the tools and infrastructure required to practice machine learning at scale is also becoming central to the college. By using VESSL campus-wide, we hope to advance AI research and education.” — Prof. Hong, Seoul National University Dean of Engineering

Yong Hee

Growth Manager
