Tutorials

24 November 2023

Deploying Docs AI with LlamaIndex, BentoML, and VESSL Serve

Build a minimally viable LLM-powered document Q&A app with VESSL AI

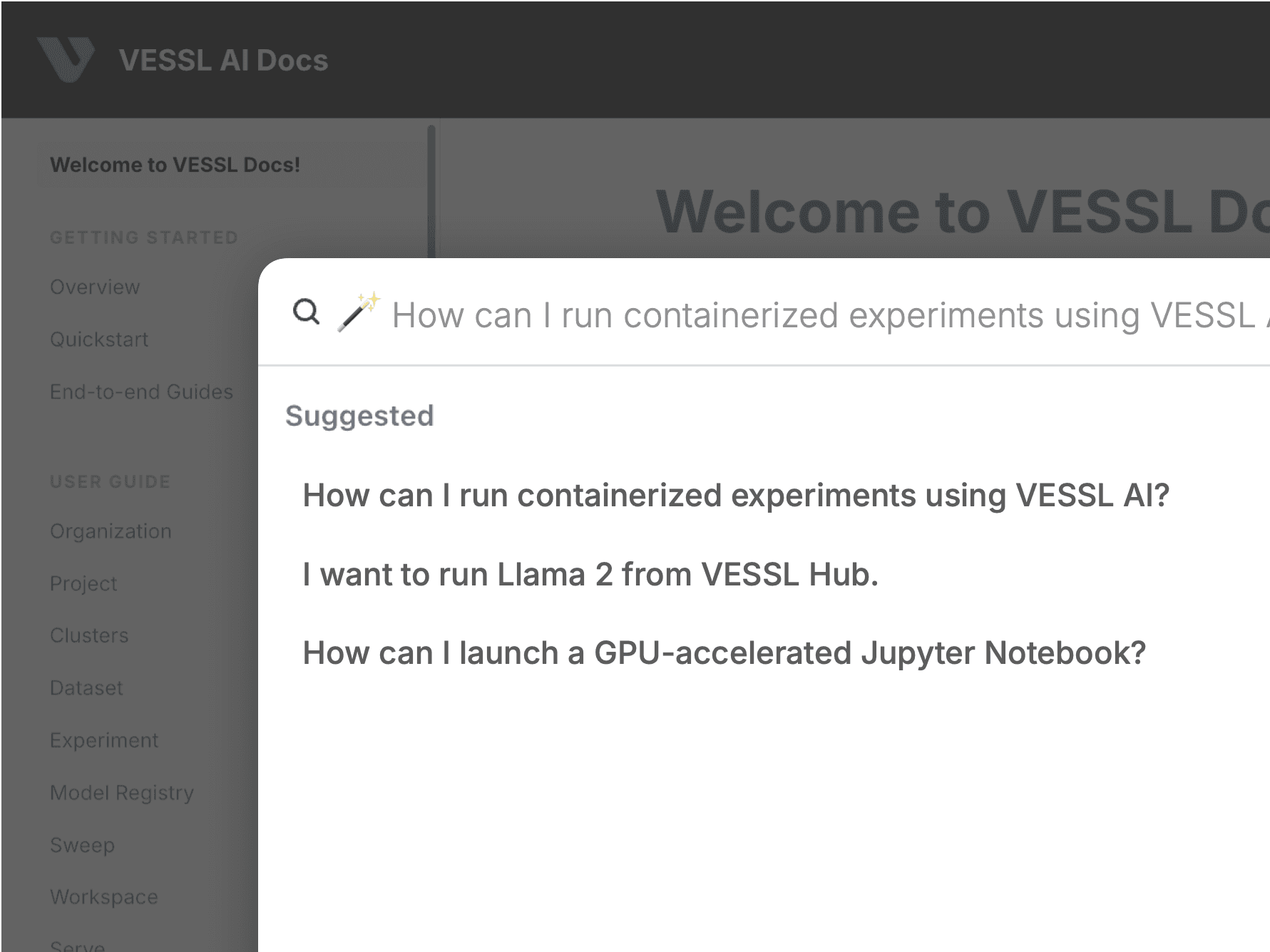

As developers, one of the most familiar use cases of “ChatGPT for X“ has been Docs AI↗, a virtual assistant that allows developers to pose natural language queries and get responses with a summary and links to relevant docs. These enhancements are helping developers to spend less time reading and more time building.

Across the industries, companies are exploring adopting similar, both internal and customer-facing, LLM-powered document Q&A chat, often with OpenAI APIs like the GPTs, Assistant, and Embedding. However, these APIs come with inherent limitations.

- Companies want to self-host the models and embeddings without third-party APIs and vendor lock-ins. Some even have to host them offline for privacy issues.

- These APIs add up in cost. For example, planned to be priced at $0.20 / GB / assistant / day, Assistants API↗ can quickly outpace the up-front investment for self-hosting LLMs.

In this post, we’ll walk through a quick recipe for a self-hosting LLM-powered Q&A chatbot. Here’s what we are going to use.

- We will use the Llama 2 pre-trained checkpoint and instructor-large↗ embedding model for our customized embedding. These two replace OpenAI APIs for GPT and Embedding models.

- We’ll use the raw markdown files for VESSL AI Docs↗ as our data and index and ingest them using LlamaIndex↗. Simply put, LlamaIndex acts as a data interface for our guide.

- We use VESSL Serve as our serving and deployment infrastructure. VESSL Serve abstracts everything you need to deploy a model — pulling models from the model registry, building web APIs, port forwarding, autoscaling, and more — into a single command.

1. Getting started

Preparing the model — Llama 2

The easiest way to start with pre-trained Llama 2 is to convert the model checkpoint to the Hugging Face interface using the following conversion script↗.

python src/transformers/models/llama/convert_llama_weights_to_hf.py \\

--input_dir /path/to/downloaded/llama/weights --model_size 7B --output_dir /output/pathTo prepare the model for deployment using VESSL Serve, upload the converted checkpoint to our model registry. You can do this by uploading your local file under Models or using our CLI command vessl model↗.

Preparing the dataset — VESSL AI Docs

Here, we will be using the raw text dataset↗ from our documentation↗. We’ve crawled our docs into separate .txt files and uploaded it on our S3. LlamaIndex is designed to take raw data like ours here; however, data preprocessing becomes essential when dealing with diverse or specialized data formats such as PDF and web crawls. For now, let’s upload the .zip file to VESSL Dataset. VESSL Dataset will help us record the lineage and snapshots of the dataset every time we run the model. This can also be done using our CLI command vessl dataset↗.

2. Configuring LlamaIndex

In serve.py↗, we’ve prepared a custom LlamaIndex session that pinpoints different values for pre-trained model, embedding model, dataset, and more by defining values like tokenizer_name, instructor_model_name, and documents.

We are pulling the instructor-large embedding model from Hugging Face. We’ve also set tokenizer_name and model_name as Llama 2 7B. You refer to other LLMs hosted on Hugging Face Models like Zephyr 7B by changing the value to HuggingFaceH4/zephyr-7b-beta. Lastly, we are also loading our dataset from our managed storage.

class InstructorEmbeddings(BaseEmbedding):

_model: INSTRUCTOR = PrivateAttr()

_instruction: str = PrivateAttr()

def __init__(

self,

instructor_model_name: str = "hkunlp/instructor-large",

instruction: str = "Represent a document for semantic search:",

**kwargs: Any,

) -> None:

self._model = INSTRUCTOR(instructor_model_name)

self._instruction = instruction

super().__init__(**kwargs)

...

documents = SimpleDirectoryReader("/docs/vessl-docs-dataset/").load_data()

...

llm = HuggingFaceLLM(

context_window=context_window,

max_new_tokens=num_output,

generate_kwargs={"temperature": 0.25, "do_sample": False},

query_wrapper_prompt=query_wrapper_prompt,

tokenizer_name="/data/llama-2-7b-hf",

model_name="/data/llama-2-7b-hf",

device_map="auto",

tokenizer_kwargs={"max_length": max_length},

# uncomment this if using CUDA to reduce memory usage

# model_kwargs={"torch_dtype": torch.float16}

)Since we are using HuggingFaceLLM to define our LLM, we can also play around with LLM-specific parameters like temperature, context_window (the size of the context window), num_output (the number of maximum output), chunk_size (the size of the text chunk). If you are working with small GPU memory size first uncomment torch.float16 and keep these values relatively low. Refer to the LlamaIndex document↗ to find out more about these parameters.

3. Serving our Docs AI

BentoML

With our LlamaIndex code all set, let’s make it serviceable using BentoML. Below, we are using BentoML's Runnable class. This Runner class defines our custom function generate which receives user input text and returns inference results. Then, we package the model into an API.

def __init__(self):

...

@bentoml.Runnable.method(batchable=False)

def generate(self, input_text: str) -> bool:

# set Logging to DEBUG for more detailed outputs

result = self.query_engine.query(input_text)

print("Query: " + input_text)

print("Answer: ")

print(result)

return result

llamaindex_runner = t.cast("RunnerImpl", bentoml.Runner(LlamaIndex, name="llamaindex"))

svc = bentoml.Service("llamaindex_service", runners=[llamaindex_runner])

@svc.api(input=bentoml.io.Text(), output=bentoml.io.JSON())

async def infer(text: str) -> str:

result = await llamaindex_runner.generate.async_run(text)

return resultVESSL Serve

Finally, we’ll set up the serving infrastructure using VESSL Serve. VESSL Serve abstracts the cloud infrastructure for serving large-scale AI models into a unified YAML definition. After defining the YAML, we can serve the model on the cloud with just a single command line.

name: llamaindex

image: quay.io/vessl-ai/ngc-pytorch-kernel:22.12-py3-202301160809

resources:

cluster: aws-apne2

preset: v1.v100-1.mem-52

import:

/examples: git://github.com/vessl-ai/examples

/model: vessl-model://vessl-ai/llama2/8

/docs: vessl-dataset://vessl-ai/vessl-docs

run:

- workdir: /data

command: |

pip install --upgrade pip

cd /examples/LlamaIndex

pip install -r requirements.txt

cd /data

tar -xvf llama2-7b-hf.gz

cd /docs/

tar -xvf vessl-docs.gz

cd /examples/LlamaIndex

pip install bentoml

bentoml serve serve_LlamaIndex:svc --timeout 180

ports:

- name: http

type: http

port: 3000

autoscaling:

metric: cpu

target: 50

min: 1

max: 3With resources, you can define the cloud or GPU specifications for the task. Here, we are using a V100 instance from our managed AWS Cloud. In case of peak usage, we also handled autoscaling. Under import, we are pulling the code from GitHub, and model and dataset from our managed model registry and storage which we uploaded previously. The YAML also opens up a dedicated port for inference tasks.

Finally, we can deploy the model into production using the following single-line command.

vessl serve revision create -f llamaindex.yaml --serving llamaindex --update-gateway --enable-gateway-if-off

4. Docs AI in action

After effectively submitting our serving workload on the cloud, you can see our Docs AI in action using the serving API window configured with BentoML. The Swagger UI page provided by BentoML provides a list of APIs you can use for our app. Here, we have a curl command that inputs a user query and uses the API to return generated texts.

curl -X 'POST' \

'http://model-service-gateway-mkl2xmoymih2.managed-cluster-apne2.vessl.ai/infer' \

-H 'accept: application/json' \

-H 'Content-Type: text/plain' \

-d 'How can I run experiments using VESSL?'You can also use Python to send requests.

import requests

import json

response = requests.post(

"http://model-service-gateway-mkl2xmoymih2.managed-cluster-apne2.vessl.ai/infer",

headers={

"accept": "text/plain",

"Content-Type": "text/plain",

},

data="How can I run experiments using VESSL?", # Replace here with your prompt.

)

print(json.loads(response.text)['response'])

What’s next?

In this guide, we explore how you can set up an MVP LLM-powered document Q&A with LlamaIndex, BentoML, and VESSL Serve. We’ve modified LlamaIndex to use a custom embedder instructor-large↗ alongside pre-trained Llama 2 7B. The dataset we used here is a minimally processed text files crawled from our documentation which exposes our Docs AI for hallucination. You can see our app in action at VESSL Hub↗.

In our next post, we will explore (1) how controlling parameters like the number of tokens and chunk size can mitigate hallucination, and (2) how companies can use our LLM Suite to easily process data to improve our app.

Yong Hee

Growth Manager

David Oh

ML Engineer Intern

SungHyun Moon

ML Lead

Try VESSL today

Build, train, and deploy models faster at scale with fully managed infrastructure, tools, and workflows.