Tutorials

14 March 2024

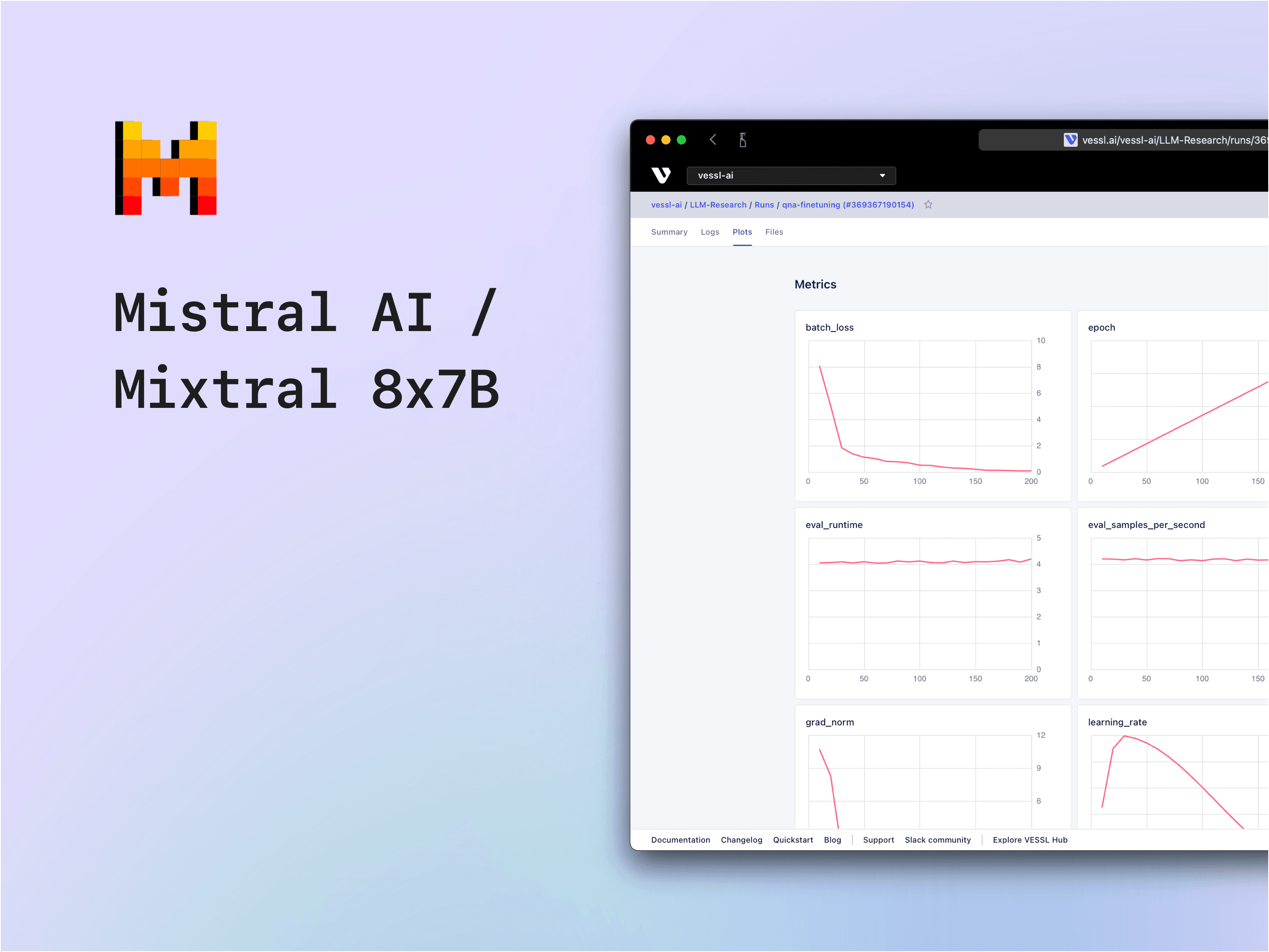

Fine-tuning Mixtral 8x7B with an LLM-generated Q&A dataset on a single GPU

Learn how to use VESSL Run to fine-tune Mixtral-8x7B with GPT-generated custom datasets

With the widespread use of LLMs, there is an increasing demand to fine-tune domain-specific LLMs. However, in most cases, fine-tuning tends to be done with datasets consisting of at least 500 samples, typically using publicly available datasets. However, to come up with dataset tailored to a specific domain, it usually requires creating it manually. This task is time-consuming and doesn’t always ensure quality consistency.

To overcome this, recent approaches have employed high-performance LLMs like GPT-4 to generate fine-tuning datasets. In this post, we will explore how you can use high-performance LLMs to create Q&A datasets. We’ll first generate Q&A datasets from our documentation, then fine-tune Mixtral 8x7B using the parameter-efficient tuning technique, based on this dataset. We’ll close off by comparing the responses from the (1) base model, (2) fine-tuned Mixtral 8x7B, and (3) Mixtral 8x7B with RAG and fine-tuning.

About the model — Mixtral 8x7B

Mixtral 8x7B is a Large Language Model released by Mistral AI, which expands the structure of a 7B model by utilizing a sparse mixture of experts (SMoE) to incorporate 8 experts. According to Mistral AI, Mixtral 8x7B outperforms Llama2-70B on most benchmarks while being 6 times faster in inference speed. In addition to the base Mixtral 8x7B model, Mistral.ai↗ has also released the Mixtral-8x7B-Instruct model, which is fine-tuned for instruction-following. In this posting, we will proceed with training based on the Instruct model, as we aim to fine-tune using a Q&A dataset. For more detailed information, refer to Mistral AI's blog post↗ and technical paper↗.

Generating the Q&A dataset with GPT-4 Turbo

To generate a Q&A pair dataset from VESSL Docs, we adapted the data generation algorithm↗ used by Alpaca.

Create 20 seed QA pairs.

Sample 3 of the generated seed QA pairs to write prompts.

Use the written prompts to generate another set of 20 QA pairs.

Compare the rouge scores of the newly generated QA pairs with the previously generated pairs, and only keep pairs with questions below a certain score.

Repeat steps 2-4 until a sufficient number of pairs are generated.

We used gpt-4-turbo-preview to generate these dataset and for our indexing embedding, we used LlamaIndex’s default OpenAIEmbedding to find appropriate contexts.

We repeated the step to get 500 samples and we were able to construct a dataset composed of 502 Q&A pairs. According to the Mistral tokenizer, the dataset has a total of 26,598 tokens with 52 tokens per sample. Each pair consists of approximately one sentence for the question and one to two sentences for the answer — for longer answers we could have lengthened the answer in the seeds and decrease the number of generated pairs instead.

The total cost for constructing the dataset was $2.22. This can vary depending on the embedding model and LLM. If cost is a main concern, you could resort to open-source embeddings↗ and running publicly available LLMs locally.

Here’s the YAML definition for generating the Q&A dataset. Add your OpenAI API key and try it out with the vessl run command.

name: qna-data-generation

description: Generate Q&A dataset using high performance LLM

tags:

- data-gen

resources:

cluster: vessl-gcp-oregon

preset: cpu-small-spot

image: quay.io/vessl-ai/python:3.10-r3

import:

/root/:

git:

url: <https://github.com/vessl-ai/examples>

ref: main

/vessl-docs/: hf://huggingface.co/datasets/VESSL/vessl-docs

export:

/outputs/: vessl-dataset://vessl-ai/VESSL-Docs-QnA-Dataset

run:

- command: |-

pip install -r requirements.txt

python generate_seed_qa_pairs.py \\

--output-dir . \\

--docs-dir /vessl-docs \\

--model gpt-4-turbo-preview \\

--temperature 1.0

python generate_qa_pairs.py \\

--output-dir ./outputs \\

--seed-qa-path ./seed.json \\

--docs-dir /vessl-docs \\

--n-pairs 500 \\

--model gpt-4-turbo-preview \\

--temperature 1.0

cp ./outputs/synthetic_data.json /outputs/vessl_docs_qna.json

echo "{

\\"vessl_docs_qna\\": {

\\"file_name\\": \\"vessl_docs_qna.json\\",

\\"file_sha1\\": \\"`sha1sum /outputs/vessl_docs_qna.json | awk '{ print $1 }'`\\",

\\"columns\\": {

\\"prompt\\": \\"question\\",

\\"response\\": \\"answer\\"

}

}

}" > /outputs/dataset_info.json

workdir: /root/llm-synthetic-data

ports: []

env:

OPENAI_API_KEY: YOUR_OPENAI_API_KEYFine-tuning Mixtral-8x7B with a custom dataset

Given that Mixtral 8x7B has approximately 46.7 billion parameters, training the model on a single GPU requires additional techniques like parameter efficient tuning (PEFT) techniques and quantization. Following Llama Factory’s hardware requirements↗, we trained the model using 4-bit quantization, and because full fine-tuning is not supported in 4-bit quantization mode, we used prominent PEFT methods such as LoRA and QLoRA.

For the training equipment, we used a single A100 80G. For the training framework, we used an implementation from Llama Factory↗. To keep track of the fine-tuning progress, we used our Python SDK vessl.log↗ to track key parameters. We trained the model with a batch size of 16 without accumulation over a total of 200 steps, applying gradient checkpointing. Here are the the YAML files for fine-tuning the model with LoRA and QLoRA.

name: qna-lora-finetuning

description: Finetune Mixtral with VESSL docs Q&A dataset

tags:

- mixtral

- finetuning

- lora

resources:

cluster: vessl-gcp-oregon

preset: gpu-a100

image: quay.io/vessl-ai/torch:2.2.0-cuda12.3-r4

import:

/root/:

git:

url: github.com/vessl-ai/examples.git

ref: main

/vessl-docs/: vessl-dataset://vessl-ai/VESSL-Docs-QnA-Dataset

export:

/outputs/: vessl-model://vessl-ai/Mixtral-VESSL-Docs-LoRA/1

run:

- command: |-

pip install -r requirements.txt

python scripts/update_dataset_info.py --path /vessl-docs

python src/train_bash.py \

--stage sft \

--do_train \

--output_dir /outputs \

--model_name_or_path mistralai/Mixtral-8x7B-Instruct-v0.1 \

--fp16 \

--quantization_bit 4 \

--double_quantization False \

--dataset vessl_docs_qna \

--template mistral \

--dataset_dir llama-factory/data \

--dataloader_num_workers 8 \

--finetuning_type lora \

--lora_alpha 16 \

--lora_rank 8 \

--lora_dropout 0.01 \

--lora_target all \

--learning_rate 5e-5 \

--adam_beta1 0.9 --adam_beta2 0.95 \

--weight_decay 0.01 \

--lr_scheduler_type cosine \

--warmup_ratio 0.1 \

--per_device_train_batch_size 16 \

--gradient_accumulation_steps 1 \

--val_size 0.1 \

--max_steps 200 \

--logging_steps 10 \

--evaluation_strategy steps \

--eval_steps 10 \

--save_steps 25 \

--save_only_model

workdir: /root/llama-factory

ports: []

name: qna-qlora-finetuning

description: Finetune Mixtral with VESSL docs Q&A dataset

tags:

- mixtral

- finetuning

- qlora

resources:

cluster: vessl-gcp-oregon

preset: gpu-a100

image: quay.io/vessl-ai/torch:2.2.0-cuda12.3-r4

import:

/root/:

git:

url: github.com/vessl-ai/examples.git

ref: main

/vessl-docs/: vessl-dataset://vessl-ai/VESSL-Docs-QnA-Dataset

export:

/outputs/: vessl-model://vessl-ai/Mixtral-VESSL-Docs-QLoRA/1

run:

- command: |-

pip install -r requirements.txt

python scripts/update_dataset_info.py --path /vessl-docs

python scripts/update_dataset_info.py --path /vessl-docs

--stage sft \

--do_train \

--do_eval \

--output_dir ./outputs \

--model_name_or_path mistralai/Mixtral-8x7B-Instruct-v0.1 \

--fp16 \

--quantization_bit 4 \

--double_quantization True \

--quantization_type nf4 \

--dataset vessl_docs_qna \

--template mistral \

--dataset_dir llama-factory/data \

--dataloader_num_workers 8 \

--finetuning_type lora \

--lora_alpha 16 \

--lora_rank 8

--lora_dropout 0.01 \

--lora_target all \

--learning_rate 5e-5 \

--adam_beta1 0.9 --adam_beta2 0.95 \

--weight_decay 0.01 \

--lr_scheduler_type cosine \

--warmup_ratio 0.1 \

--per_device_train_batch_size 16 \

--gradient_accumulation_steps 1 \

--val_size 0.1 \

--max_steps 200 \

--logging_steps 10 \

--evaluation_strategy steps \

--eval_steps 10 \

--save_steps 25 \

--save_only_model

workdir: /root/llama-factory

Comparing results

Here are the train loss, training time, and memory consumption for our two fine-tuning methods.

LoRA — training loss 1.1743, training time 00:08:15.43, memory consumption 32355 MB

In the experiments, both techniques showed similar learning patterns. The training time for LoRA was approximately 17 seconds shorter, while memory usage for QLoRA was about 2GB less. QLoRA employs double quantization to reduce memory usage, which is likely why it sacrifices training speed.

Here’s an example of the generated results.

How can I create a new model via web?Answer by base Mixtral

To create a new model via the web, you would typically use a web framework to build a user interface that allows you to define and create the model. The specifics of how to do this will depend on the web framework and programming language you are using. Here is a general outline of the steps you might take:

1. Choose a web framework: There are many web frameworks available for different programming languages. Some popular choices include Django (Python), Ruby on Rails (Ruby), and Express (JavaScript).

2. Create a new model class: In your web framework, you will typically define a new class that represents the model. This class will define the properties and behaviors of the model.

3. Connect to a database: You will need to connect your model to a database so that you can store and retrieve instances of the model. Many web frameworks have built-in support for connecting to databases.

4. Create a form for the model: In order to create a new instance of the model, you will need to create a form that allows the user to input the necessary data. This form will typically be generated automatically by the web framework based on the model class.

5. Handle the form submission: When the user submits the form, you will need to handle the submission by creating a new instance of the model and saving it to the database.

6. Display the new model: Once the new model has been created, you will need to display it to the user. This might involve displaying a confirmation message or redirecting the user to a page that shows the new model.

Here is an example of how you might create a new model in Django:

1. Define a new model class in a file called `models.py`:

```

from django.db import models

class MyModel(models.Model):

name = models.CharField(max_length=100)

description = models.TextField()

```

1. Connect to the database by adding the following to the `settings.py` file:

```

DATABASES = {

'default': {

'ENGINE': 'django.db.backends.postgresql',

'NAME': 'mydatabase',

'USER': 'myuser',

```Answer by Mixtral finetuned with LoRA

To create a new model, go to the "Models" section, click on "New Model", and fill out the required information.Answer by Mixtral finetuned with QLoRA

To create a new model, go to the "Models" section, click on "New Model", and fill out the required information.The base Mixtral model provided arbitrary instructions, lacking product-specific contexts. In contrast, the fine-tuned model recognizes that the question refers to our product’s model repository and provided instructions on creating a new model repo.

Answer by RAG with base Mixtral

You can create a new model in two ways via the web:

1. From the model repository page:

* Click the `New Model` button.

* Set the model description and tag.

* Choose the desired directory to put the model.

2. From the experiment detail page:

* Click the `Create Model` button under the `Actions` button.

* Select the model repository.

* Set the model description and tag.

* Choose the desired directory among the output files of the experiment.

* Include or exclude specific directories in the output files checkbox section.Answer by RAG with Mixtral finetuned with LoRA

1. Create a model from the model repository page by clicking the New Model button, setting the model description and tag, finding the experiment you want, and choosing the desired directory.

2. Create a model from the experiment detail page by clicking the Create Model button under the Actions button, setting the model description and tag, and choosing the desired directory among the output files of the experiment.Answer by RAG with Mixtral finetuned with QLoRA

1. Create a model from the model repository page by clicking the New Model button, setting the model description and tag, finding the experiment you want, and choosing the desired directory.

2. Create a model from the experiment detail page by clicking the Create Model button under the Actions button, setting the model description and tag, and choosing the desired directory among the output files of the experiment.Both the base and fine-tuned model with RAG provided accurate answers. However, while Mixtral listed the answer in multiple steps, the fine-tuned model was explained in a single sentence without line breaks between the two steps. This pattern likely follows the format of the data used for fine-tuning, which consisted of 1-2 sentences.

Conclusion

Here, we've discussed how you can use GPT-4 to generate domain-specific Q&A datasets and fine-tune Mixtral 8x7B for improved performance. This showed the significance of fine-tuning in enhancing a model's contextual understanding, and the performance trade-off between the two go-to methods like LoRA and QLoRA.

We also saw how RAG further enhances the model’s contextual awareness, albeit with stylistic differences influenced by the training dataset's structure. This underscores the fine-tuned model's adeptness at adhering to the instruction dataset.

You can build on this experimentation and explore more by visiting our model hub.

Sanghyuk

ML Engineer

Yong Hee

Growth Manager

Try VESSL today

Build, train, and deploy models faster at scale with fully managed infrastructure, tools, and workflows.