08 January 2024

Scatter Lab: Building a more human sLLM & Personal AI with VESSL AI

VESSL AI’s end-to-end LLMOps platform helps Scatter Lab scale Asia’s most advanced sLLM "Pingpong-1"

Founded in 2011, Scatter Lab is the developer behind “Iruda”, South Korea’s leading chatbot service. In 2022, amid the rise of ChatGPT, Scatter Lab released Iruda 2.0, a Personal AI built on its latest large language model, “Pingpong-1”. Since its release, Iruda has been downloaded over 2 million times and has processed more than 900 million messages, about 64 messages per user per day, an engagement level comparable to that of the country’s most widely used messaging app.

Building on the success of Iruda 2.0 and its decade-long expertise in developing chatbots, Scatter Lab has expanded into the B2B domain, providing custom-built LLMs and AI assistants for enterprises. Its latest offerings include “Pingpong Studio”, where companies can quickly and securely test their proprietary datasets on Scatter Lab’s pre-trained LLMs and explore different characters for their chatbots. As Scatter Lab scaled its LLM initiatives, VESSL AI provided the critical LLMOps infrastructure for exploring, fine-tuning, and deploying LLM and generative AI applications.

Adding human qualities to LLMs cost-effectively, without compromising data privacy

With the rise of production-grade open-source LLMs like Llama 2 and Mistral 7B, companies are rushing to develop custom, industry- and purpose-specific LLMs with their proprietary datasets. A growing number of companies, like Scatter Lab, are fine-tuning these models rather than being locked into OpenAI’s GPT-3.5 or GPT-4 APIs.

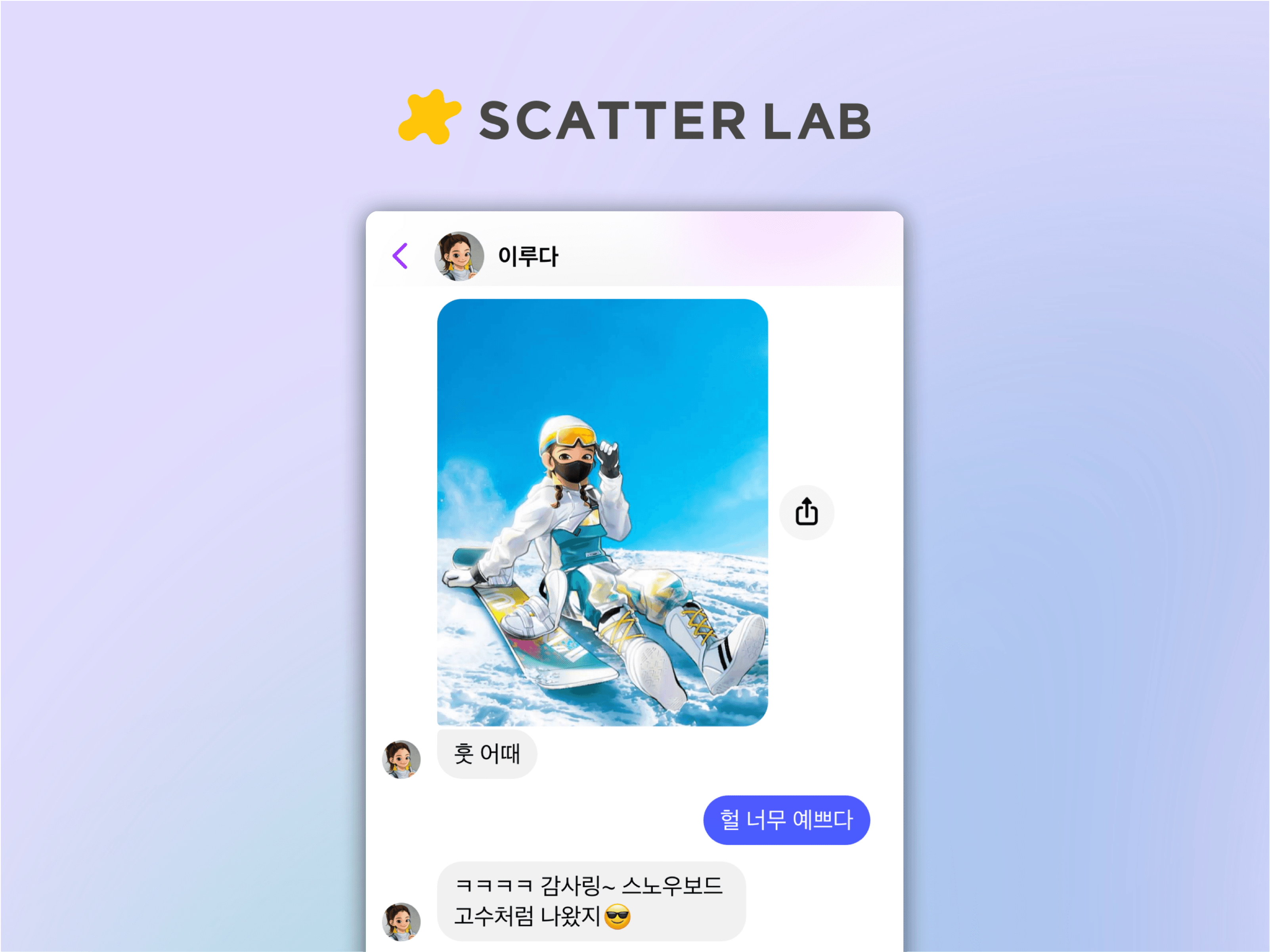

Scatter Lab set out to develop a “more human” AI assistant. While ChatGPT far exceeds other current LLMs in reasoning, it lacks the emotional and personal qualities an AI companion needs. For Iruda, Scatter Lab wanted to go beyond question answering and replicate the experience of chatting with a friend on a messaging app. For example, Iruda replies with emojis and memes, and sometimes even waits a few minutes before responding. The experience is further enhanced by Pingpong-1’s multi-modality and proactivity.

- Multi-modality: Iruda can understand user inputs that include multimedia content and respond with multimedia content of its own.

- Proactivity: Iruda can start a new conversation on its own, without waiting for the user to message first.

Beyond building a more empathetic model, Scatter Lab also had two practical reasons to train and fine-tune its own LLM: privacy and cost.

- Data privacy & protection: Scatter Lab operates under an internal zero-tolerance policy on data privacy and data protection. Ruling out any chance of leaking training data meant not relying on OpenAI’s GPT or other third-party model APIs. Scatter Lab chose VESSL AI to build a secure hybrid-cloud infrastructure on top of its private clouds and bare-metal GPUs.

- Inference & GPU cost: Given (1) the sheer volume of data, with 900+ million cumulative messages, (2) the fact that a single conversation comprises 2,000 to 3,000 tokens, and (3) GPT-3.5’s price of $0.003 per 1K tokens, inference alone could cost up to millions of dollars per month, not to mention the $0.008 per 1K tokens charged for fine-tuning the model. Scatter Lab wanted to reduce its long-term inference cost by building its own custom model, and chose VESSL AI to cut the GPU cost of fine-tuning Pingpong-1 by up to 40%.
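A back-of-envelope version of the inference-cost reasoning above is sketched below. It is illustrative only: it ASSUMES that each of the 900+ million cumulative messages is one billed request carrying a full 2,000 to 3,000 token conversation context at the quoted $0.003 per 1K tokens, and it ignores output-token pricing. The function name `inference_cost_usd` is hypothetical.

```python
def inference_cost_usd(requests: int, tokens_per_request: int, price_per_1k_tokens: float) -> float:
    """Estimated API cost in USD: (total tokens / 1,000) * price per 1K tokens."""
    return requests * tokens_per_request / 1_000 * price_per_1k_tokens


# Figures cited in the text; treating each message as one billed request
# with a full 2,000-3,000 token conversation context is an ASSUMPTION.
MESSAGES = 900_000_000
PRICE_PER_1K = 0.003  # GPT-3.5 price per 1K tokens, as quoted above

for tokens in (2_000, 3_000):
    cost = inference_cost_usd(MESSAGES, tokens, PRICE_PER_1K)
    print(f"{tokens:,} tokens/request -> ${cost:,.0f}")
```

Under these assumptions, the cumulative message volume alone corresponds to roughly $5.4M to $8.1M in input-token charges, before output tokens or fine-tuning fees; the actual monthly figure depends on traffic numbers the article does not state.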

Infrastructural challenges of fine-tuning LLMs

Building on an open-source sLLM, Scatter Lab set out to bring Pingpong-1 to life through supervised fine-tuning (SFT) and reinforcement learning from human feedback (RLHF). However, as the team transitioned from traditional NLP models to LLMs, it faced infrastructure challenges of an entirely different scale.

- GPU scarcity: Amid the global GPU shortage, especially of HPC-grade cards, Scatter Lab had to search across multiple clouds and regions. The A100 80GB, the specific GPU Scatter Lab needed to fine-tune an LLM, was essentially sold out on the major clouds.

- GPU optimization: While Scatter Lab needed every bit of FLOPS it could get, utilization of its existing on-premises A100 clusters and hard-won cloud GPU instances, shared by 20+ machine learning engineers, was as low as 10%.

- Scalability: Scatter Lab was relying on GPU notebooks. To move from a prototype to a GPT-scale, production-grade LLM, Scatter Lab needed scalable, reliable infrastructure that could sustain days of fine-tuning and high-frequency inference.

Leveraging LLMOps infrastructure to accelerate time-to-deployment and increase GPU utilization by 4X

Scatter Lab chose VESSL AI as its LLMOps infrastructure to fine-tune Pingpong-1 faster and more cost-effectively at scale. The team saw the impact of LLMOps infrastructure from Day 1, starting with GPU procurement and optimization.

Reducing GPU lead time from weeks to seconds

Previously, Scatter Lab struggled to obtain NVIDIA A100 80GB and H100 80GB GPUs. This scarcity kept the team from fine-tuning Pingpong-1 even after it had acquired the right dataset and completed its research on fine-tuning techniques. With VESSL Clusters, Scatter Lab could instantly check instance availability across multiple clouds, such as AWS, GCP, Azure, Oracle, CoreWeave, and Lambda Labs, and immediately launch GPU-accelerated fine-tuning jobs with the vessl run command.
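The article does not describe VESSL Clusters’ API, so the sketch below only illustrates the underlying idea: query an availability snapshot spanning several providers and pick a location that can satisfy a GPU request. The `Offer` type, the `find_capacity` function, and the snapshot contents are all hypothetical and are not part of VESSL’s SDK or CLI.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Offer:
    provider: str   # cloud or on-prem cluster name (hypothetical)
    region: str
    gpu: str
    available: int  # GPUs currently free in this location


def find_capacity(offers: List[Offer], gpu: str, count: int) -> Optional[Offer]:
    """Return the location with the most free GPUs of the requested type that fits `count`, else None."""
    candidates = [o for o in offers if o.gpu == gpu and o.available >= count]
    return max(candidates, key=lambda o: o.available, default=None)


# Made-up availability snapshot; real numbers would come from each provider.
snapshot = [
    Offer("cloud-a", "region-1", "A100-80GB", 0),
    Offer("cloud-b", "region-2", "A100-80GB", 8),
    Offer("cloud-c", "region-1", "H100-80GB", 4),
]
print(find_capacity(snapshot, "A100-80GB", 8))
print(find_capacity(snapshot, "A100-80GB", 16))  # None: no location has 16 free
```

Preferring the location with the most free GPUs is just one simple policy; a real scheduler would also weigh price, data locality, and quota.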

Optimizing GPU usage by 4X

Scatter Lab’s GPU utilization, both in the cloud and on-premises, had hovered between 10% and 20%. By (1) integrating multiple clusters into VESSL Cluster’s single control plane, (2) packaging Jupyter notebooks into containerized runs, and (3) dynamically allocating GPUs across these runs, Scatter Lab raised average GPU utilization to 80%. Together with VESSL AI’s native support for distributed training and autoscaling, this optimization helped the team run large-scale fine-tuning workloads efficiently without a matching rise in GPU spend.
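The article does not describe VESSL’s scheduler internals. As a hedged illustration of why pooling clusters and allocating GPUs per containerized run raises utilization, the sketch below swaps in a textbook first-fit-decreasing bin-packing heuristic over a hypothetical pool of nodes. It measures allocated GPUs, a simplification of actual compute utilization; node names and run sizes are made up.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Node:
    name: str
    gpus: int      # total GPUs on the node
    used: int = 0  # GPUs currently allocated to runs


def schedule(nodes: List[Node], requests: List[int]) -> List[int]:
    """First-fit decreasing: place the largest runs first, each on the first node with room.

    Returns the GPU requests that could not be placed (they would wait in a queue).
    """
    pending = []
    for need in sorted(requests, reverse=True):
        node = next((n for n in nodes if n.gpus - n.used >= need), None)
        if node is None:
            pending.append(need)
        else:
            node.used += need
    return pending


def utilization(nodes: List[Node]) -> float:
    """Fraction of all pooled GPUs that are allocated."""
    total = sum(n.gpus for n in nodes)
    return sum(n.used for n in nodes) / total if total else 0.0


# Hypothetical pool spanning an on-prem cluster and a cloud cluster.
pool = [Node("on-prem-0", 8), Node("cloud-0", 8)]
runs = [4, 2, 2, 4, 1, 2]  # hypothetical GPUs requested per containerized run
print("unplaced:", schedule(pool, runs), "utilization:", f"{utilization(pool):.0%}")
```

For reference, the heading’s 4X corresponds to moving average utilization from about 20% to 80% (0.80 / 0.20 = 4).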

GPT-scale fine-tuning & deployment in just a single command

With VESSL Run, Scatter Lab condensed instance setup, runtime configuration, and volume mounts into a single YAML file and a vessl run command. This let the team focus on iterating faster across multiple models and datasets. Once fine-tuning was complete, Scatter Lab could deploy and test these models in production simply by adding the few key-value pairs needed to create endpoints and open ports.

End-to-end AI infrastructure, from days to minutes

Behind these YAML files, VESSL Run supports features such as distributed training, automatic failover, and autoscaling, enabling faster time-to-production for large-scale AI models. Fine-tuning an LLM can take several days, during which instances might terminate prematurely, wiping out models trained at significant expense. Building in-house infrastructure to handle such failures would require at least six months of company-wide investment. By adopting VESSL AI, Scatter Lab could build on robust end-to-end AI infrastructure from Day 1.
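The article does not say how VESSL Run implements automatic failover. A common building block for surviving premature termination is periodic checkpointing with resume-on-restart; the minimal sketch below shows that pattern with a toy training loop and a JSON checkpoint. The `Preempted` exception, `train`, and the checkpoint format are hypothetical stand-ins; a real fine-tuning job would save model and optimizer state to durable storage.

```python
import json
import os
import tempfile
from typing import Optional


class Preempted(Exception):
    """Hypothetical stand-in for an instance being terminated mid-run."""


def save_checkpoint(path: str, state: dict) -> None:
    """Write state via temp file + rename so a crash mid-write cannot corrupt the last good checkpoint."""
    fd, tmp_path = tempfile.mkstemp(dir=os.path.dirname(os.path.abspath(path)), suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)  # atomic rename on POSIX filesystems


def load_checkpoint(path: str) -> dict:
    """Return the last saved state, or a fresh one if no checkpoint exists yet."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"step": 0, "loss": None}


def train(path: str, total_steps: int, save_every: int, preempt_at: Optional[int] = None) -> dict:
    """Toy training loop that resumes from the last checkpoint on every (re)start."""
    state = load_checkpoint(path)
    for step in range(state["step"], total_steps):
        if step == preempt_at:
            raise Preempted(f"instance lost before step {step}")
        state = {"step": step + 1, "loss": 1.0 / (step + 1)}  # stand-in for an optimizer step
        if state["step"] % save_every == 0:
            save_checkpoint(path, state)
    save_checkpoint(path, state)
    return state


ckpt = os.path.join(tempfile.mkdtemp(), "ckpt.json")
try:
    train(ckpt, total_steps=100, save_every=10, preempt_at=57)
except Preempted:
    print("resuming from step", load_checkpoint(ckpt)["step"])  # steps 51-57 are lost
print("finished at step", train(ckpt, total_steps=100, save_every=10)["step"])
```

The trade-off is the checkpoint interval: saving more often loses less work on failure but spends more time writing state.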

MLOps in the era of Generative AI & LLMs

As Scatter Lab’s collaboration with VESSL AI demonstrates, large-scale AI and MLOps infrastructure matters more than ever. Production-grade open-source LLMs and generative AI models like Llama 2, Mistral 7B, and Stable Diffusion have largely commoditized the model stack, yet the infrastructure backbone for fine-tuning and deploying these models is growing more complex and requires a different stack from traditional AI/ML.

VESSL AI is excited to work with the fastest-growing generative AI companies, like Scatter Lab and Wrtn Technologies, and to provide the mission-critical AI infrastructure that helps them scale cost-effectively.

Yong Hee

Growth Manager
